The HTTPS padlock icon on bbc.co.uk

Back in early 2015, I'd just started working at the BBC and, whilst getting to know who's who and what's what, I discovered to my surprise that large parts of our main websites (www.bbc.co.uk and www.bbc.com) were only available over plaintext HTTP. My immediate thought was, "Well, here's something I can get stuck into straight away - how hard can it be to get to 100% HTTPS?".

We're now about six years on, and we're only just finishing the full migration to HTTPS. All you need for HTTPS is a vaguely modern CDN/traffic manager/server and a TLS cert plus a few changes to your HTML, right? Yeah, wouldn't it be great if things were that simple!

Setting the scene

At this stage, it probably helps to rewind a little to a circa 2015 context. It'd been about two years since the , which had helped to squash any remaining doubts as to the necessity of HTTPS. Around this time, the major web browsers began signalling their intentions to gradually ramp up the pressure on website operators to serve websites over HTTPS by restricting access to sensitive APIs to HTTPS contexts and also via changes in user interface (UI) indicators. Various platforms and services such as were created or came to prominence, making it cheaper, easier, and faster to get TLS certificates and securely serve web content. The direction of travel for the web was clear - HTTPS was gradually replacing HTTP as the default transport, but we were nowhere near as far along the road as we are now.

The BBC websites share a common public 'web edge' traffic management service. The web edge is similar to a CDN in that it handles TLS termination (as well as routing, caching and so on), but behind it sit individual stacks managed by our Product teams - these form our , , , sites amongst others. It's fair to say that in 2015, HTTPS coverage across our Product teams' websites was quite mixed - as was true of much of the internet.

Our web edge already offered HTTPS 'for free' to our Product teams in 2015. To migrate to HTTPS, a Product team had to do the engineering work to make their website HTTPS-compatible and then opt in to an 'HTTPS allowlist' - otherwise, our web edge would force their traffic to HTTP.
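As a rough illustration of the allowlist behaviour, here's a minimal Python sketch of the per-request decision described above. The allowlist entries, the path-prefix matching and the 302 status code are all hypothetical; it isn't meant to reflect how our edge is actually configured.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit

# Hypothetical allowlist entries: path prefixes whose Product teams have opted in to HTTPS.
HTTPS_ALLOWLIST = ("/news", "/sport")


def is_allowlisted(path: str) -> bool:
    # Match whole path segments so that "/news" does not also match "/newsround".
    return any(path == p or path.startswith(p + "/") for p in HTTPS_ALLOWLIST)


def edge_decision(url: str) -> Tuple[int, Optional[str]]:
    """Return (status, redirect location) for a request reaching the web edge.

    Plain HTTP is always passed through; HTTPS is only passed through for
    allowlisted paths, and everything else is redirected down to HTTP.
    The 302 status is an assumption for this sketch.
    """
    parts = urlsplit(url)
    if parts.scheme == "https" and not is_allowlisted(parts.path):
        return 302, parts._replace(scheme="http").geturl()
    return 200, None
```

For example, `edge_decision("https://www.bbc.co.uk/weather")` would send the browser back to `http://www.bbc.co.uk/weather`, while an HTTPS request under an allowlisted prefix passes straight through.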

Raising the issue

The first formal step I took towards 100% HTTPS was to present at a forum attended by most of the BBC Product architects, to raise the issue, highlight why we should migrate to HTTPS and explain what was soon going to change in Chrome's UI signals:

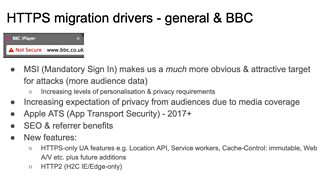

Screengrab of a presentation slide which highlights the drivers for HTTPS adoption.

The web browser UI signalling changes for plaintext HTTP were pretty new at the time and not as widely communicated as they are now. The planned UI changes were a really useful driver for our Product teams, since they were concrete changes with direct user impact: something to galvanise action around, with a timeline to work to. Our Product teams were, of course, all more than a little bit aware of HTTPS, and those who hadn't already migrated intended to do so as time allowed. However, the presentation helped a few of them make the business case for the change, and the discussion helped bring HTTPS further into the general conversation.

The h2 carrot

'HTTP/2 all the things!' meme on a presentation slide.

A year or so on from my initial presentation about HTTPS, we began to think in more detail about providing HTTP/2 (h2) on our web edge, since browser and server support was mature enough by then. We did the requisite planning work and the usual comms to our teams, and then rolled h2 out. We had a bit of an issue with this, and there was a fair bit of work involved, but before long all our HTTPS web pages were also available over h2. Because web browsers only support h2 over HTTPS, this was an added carrot for the teams who'd not yet migrated.

Product migrations

Our Product teams have done the bulk of the work in migrating BBC websites to HTTPS on their individual stacks. As well as in-place updates, there has been some major re-platforming work that is moving our Product websites on to new, HTTPS-native platforms such as Web Core for BBC Public Service, Simorgh for BBC World Service and new, dedicated platforms for BBC iPlayer and Sounds.

I didn't get involved specifically in any of these Product migrations, aside from the odd conversation and friendly badgering, so whilst it was a lot of work and absolutely vital, it's relatively well-understood work. So, for the remainder of this blog post, I'll focus more on the aspects of our migration that were perhaps less obvious (and often really quite awkward).

Content retention - 'The Archive'

The BBC has a content retention mandate which states:

13.3.8 Unless content is specifically made available only for a limited time period, there is a presumption that material published online will become part of a permanently accessible archive and should be preserved in as complete a state as possible.

During a re-platforming in circa 2013-14, the decision was taken to archive (rather than migrate to the new CMS) a lot of the older content, which our retention mandate demands we keep online. The archive was produced via a crawler which saved web pages to online object storage as flat HTML and asset files. We ended up with somewhere in excess of 150 million archived web pages across hundreds of retired Products - all of which were captured as plaintext HTTP.

An archived web page: BBC Sing.

Accurately migrating this many wildly differing static pages to HTTPS is not simple. Some quick maths and thinking-through ruled out writing a crawler to run through the archive and update the HTML, JavaScript and CSS in place - it's too risky, slow and expensive. Instead, I used our comprehensive access logging/analysis system, , to make sure that clients supported the CSP upgrade-insecure-requests directive, then trialled allowing HTTPS on a section of the archive whilst adding the Content-Security-Policy: upgrade-insecure-requests header to instruct clients to automagically upgrade HTTP links/asset loads to HTTPS.

The trial worked well, so we gradually rolled this out and monitored the effects via access logs, the CSP and elements of the .
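To make the archive approach a bit more concrete, here's a deliberately simplified Python model of what the upgrade-insecure-requests directive asks a browser to do with the http:// URLs baked into an archived page. The function and parameter names are hypothetical, and the exact rules in the W3C Upgrade Insecure Requests specification have more detail than this sketch captures.

```python
from urllib.parse import urlsplit

# The response header added to archived pages served over HTTPS.
UIR_HEADER = ("Content-Security-Policy", "upgrade-insecure-requests")


def upgraded_url(url: str, document_host: str, is_navigation: bool) -> str:
    """Simplified model of how a browser applies upgrade-insecure-requests.

    Subresource loads (images, scripts, stylesheets) on an http:// URL are
    rewritten to https://, whatever their host. Navigations (following a link)
    are only upgraded when they target the page's own host; links to other
    sites are left alone.
    """
    parts = urlsplit(url)
    if parts.scheme != "http":
        return url
    if is_navigation and parts.hostname != document_host:
        return url
    netloc = parts.netloc
    if netloc.endswith(":80"):
        # An explicit HTTP port becomes the default HTTPS port.
        netloc = netloc[:-3]
    return parts._replace(scheme="https", netloc=netloc).geturl()
```

The useful property for the archive is that none of the archived HTML has to change: the header alone makes the browser fetch hard-coded http:// assets over HTTPS, which is far cheaper than rewriting 150-million-odd pages.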

Robots.txt and friends

The final major hurdle we encountered was serving global static assets - robots.txt, sitemaps, third-party authentication files and the like. We were still using our previous-generation traffic managers to host these, and their configuration was unexpectedly coupled to our HTTPS allowlist logic. That wasn't a problem in itself, but it meant that when I asked one of our ops teams to remove the HTTPS allowlist, serving these static assets broke. Time for a rethink.

Our 24/7 support/ops team valiantly stepped in to build and run a new service that solved two problems in one: it moved the routing of global assets to our new traffic managers, and it served them in a single-scheme fashion, no longer coupled to the HTTPS allowlist.

Removing the HTTPS allowlist

Once the robots.txt (et al.) problem was solved, we could finally remove the HTTPS allowlist, which meant that all content on www.bbc.co.uk was available over HTTPS. That was a really key step in this whole process.

HSTS

Once we had all our content on www.bbc.co.uk available over HTTPS, we began rolling out HSTS (HTTP Strict Transport Security), which instructs web browsers to silently upgrade any plaintext HTTP links to www.bbc.co.uk that they come across to HTTPS. So that we can gain confidence, and revert in a reasonable time if there are problems, we'll gradually increase the max-age on our HSTS header as follows:

- Set to 10 seconds, then wait for 1 day (basic test for major issues)

- Set to 600 seconds (10 minutes), then wait for 2 days (covers most page-to-page navigations)

- Set to 3600 seconds (1 hour), then wait for 4 days (also covers most iPlayer/Sounds durations)

- Set to 86400 seconds (1 day), then wait for 14 days (covers frequent users day-to-day)

- Set to 2592000 seconds (30 days), then wait for 6 months (covers most users)

- Set to 31536000 seconds (1 year)
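The ramp above can be written down as data, which makes it easy to sanity-check the numbers and see when each step starts. This is a small Python sketch; the step values come from the list above, and treating "6 months" as 182 days is an assumption made purely for the arithmetic.

```python
from datetime import timedelta
from typing import List, Optional, Tuple

# The HSTS ramp: (max-age in seconds, how long to hold it before the next step).
# Assumption: "6 months" is approximated as 182 days.
HSTS_RAMP: List[Tuple[int, Optional[timedelta]]] = [
    (10, timedelta(days=1)),
    (600, timedelta(days=2)),
    (3600, timedelta(days=4)),
    (86400, timedelta(days=14)),
    (2592000, timedelta(days=182)),
    (31536000, None),  # final value, held indefinitely
]


def ramp_schedule() -> List[Tuple[int, str]]:
    """Return (start day, Strict-Transport-Security value) for each step."""
    schedule = []
    day = 0
    for max_age, hold in HSTS_RAMP:
        schedule.append((day, f"max-age={max_age}"))
        if hold is not None:
            day += hold.days
    return schedule


if __name__ == "__main__":
    for day, value in ramp_schedule():
        print(f"day {day:>3}: Strict-Transport-Security: {value}")
```

Under that assumption, the final one-year value would go out roughly 203 days after the first 10-second step. The reason for ramping slowly is that once a browser has cached max-age=N, it won't use plaintext HTTP for that host for up to N seconds, so if something broke over HTTPS, the current max-age bounds how long affected users could be stuck. Short early values keep that window small while confidence builds.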

To de-risk HSTS, on top of all the work above, we progressed it through our pre-live environments, did some theoretical analysis, and used Chrome's net-internals facility (chrome://net-internals/#hsts) to add HSTS for www.bbc.co.uk locally.

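Assuming the HSTS rollout goes to plan, we'll look into HSTS preloading for www.bbc.co.uk to avoid the ToFU (Trust on First Use) issue, where a browser's very first visit, before it has ever seen our HSTS header, can still be made over plaintext HTTP.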
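As a starting point for the preloading work, here's a minimal Python check of a Strict-Transport-Security header value against the header requirements listed by hstspreload.org at the time of writing: a max-age of at least 31536000 seconds, plus the includeSubDomains and preload directives. It only looks at the header. The list has other requirements (such as redirecting HTTP to HTTPS and serving every subdomain over HTTPS) that this sketch doesn't cover, and those requirements can change, so check the current list before relying on it. The function names are hypothetical.

```python
from typing import Dict, List, Optional

# Header requirement as published by hstspreload.org at the time of writing (may change).
MIN_PRELOAD_MAX_AGE = 31536000  # one year, in seconds


def parse_sts(value: str) -> Dict[str, Optional[str]]:
    """Split a Strict-Transport-Security value into lower-cased directives."""
    directives: Dict[str, Optional[str]] = {}
    for part in value.split(";"):
        part = part.strip()
        if not part:
            continue
        name, sep, arg = part.partition("=")
        # Directive names are case-insensitive; values may be quoted.
        directives[name.strip().lower()] = arg.strip().strip('"') if sep else None
    return directives


def preload_header_problems(value: str) -> List[str]:
    """Return the reasons a header would fail the preload header checks."""
    directives = parse_sts(value)
    problems = []
    try:
        max_age = int(directives.get("max-age") or "")
    except ValueError:
        problems.append("missing or invalid max-age")
    else:
        if max_age < MIN_PRELOAD_MAX_AGE:
            problems.append(f"max-age {max_age} is below {MIN_PRELOAD_MAX_AGE}")
    if "includesubdomains" not in directives:
        problems.append("missing includeSubDomains")
    if "preload" not in directives:
        problems.append("missing preload")
    return problems
```

Note that the final step of the ramp above (max-age=31536000 on its own) passes the max-age check but would still need includeSubDomains and preload adding before it qualified.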

What's left to do?

Having jumped over most of the hurdles in our way, the last few jobs to do right now are:

- Use the "force HTTPS" feature in our traffic managers in conjunction with the already deployed CSP "upgrade insecure requests" on our archived web pages to ensure archived pages and their assets are loaded over HTTPS.

- Inform our Product teams that they can opt in to using the "force HTTPS" feature and therefore remove their own HTTP → HTTPS redirects in their origin services.

- Migrate the remaining couple of websites on www.bbc.com which are still plaintext HTTP, then roll out HSTS on www.bbc.com as well.
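The "force HTTPS" step moves the HTTP → HTTPS redirect from each origin to the traffic manager. A minimal Python sketch of that edge behaviour might look like the following; the function name, the per-Product opt-in flag and the 301 status code are assumptions, not our traffic managers' actual configuration.

```python
from typing import Optional, Tuple
from urllib.parse import urlsplit


def force_https(url: str, opted_in: bool) -> Optional[Tuple[int, str]]:
    """Edge-side HTTP -> HTTPS redirect (hypothetical sketch).

    Returns (status, location) when the edge should redirect, or None when
    the request should be passed through to the origin unchanged.
    """
    parts = urlsplit(url)
    if not opted_in or parts.scheme != "http":
        return None
    netloc = parts.netloc
    if netloc.endswith(":80"):
        # Drop an explicit HTTP port so the default HTTPS port is used.
        netloc = netloc[:-3]
    # 301 (assumed here) so that clients and crawlers treat the HTTPS URL as canonical.
    return 301, parts._replace(scheme="https", netloc=netloc).geturl()
```

Because the redirect is issued at the edge, before the request reaches the origin, an opted-in Product no longer needs its own redirect logic; path and query string are carried across unchanged.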