Software Engineer Matthew Mould explains the decision-making process and strategy behind the build of the BBC's new Android Media Player.

Users of the BBC’s Android mobile applications are familiar with the need to install a separate application to play multimedia content. This application uses Adobe's proprietary technology to play back media encoded in the RTMP and HDS formats. The BBC has been moving towards an open streaming standard called DASH (Dynamic Adaptive Streaming over HTTP), and I have been working in a team to develop a native Android component called Standard Media Player for Android (SMP-AN), which uses Google's open-source ExoPlayer to play back DASH content. This component will be integrated into the wide range of BBC applications available via the Google Play store. It’s a more efficient technology for the BBC to use, thanks to its use of open standards, and it removes the need for users to install a separate application - something which has long been a source of complaints.

We built the player as an application component, with a codebase to which integrating clients (BBC iPlayer, BBC News, etc.) could either contribute, or use out of the box. In building this component, we emphasised comprehensible, robust, flexible and well-tested code. The following offers an insight into some of the architectural decisions that we made while building it.

Stability of API

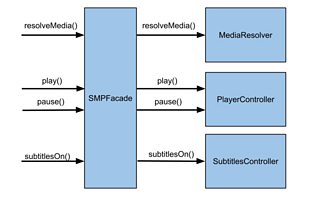

A key factor in producing an easily usable software library is maintaining a stable API. We therefore began our design by creating an interface called SMP (Standard Media Player), which exposed all of the methods that we wanted integrating clients to access, such as play, pause and seek. At the top level, this interface is implemented by a facade class called SMPFacade, a thin class that delegates calls down to the appropriate classes.

Clients therefore require no specific knowledge of the underlying architecture of SMP and we retain the ability to separate our code into an appropriate class structure.
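To make the shape of this concrete, here is a minimal sketch of a facade behind a stable interface. It is illustrative only and is not SMP's actual code: the names SMP, SMPFacade, play, pause and seek come from the description above, while PlaybackController, SeekHandler and the method signatures are hypothetical.

```java
// Illustrative sketch only. SMP and SMPFacade are named in the article;
// the signatures and the collaborator classes below are assumptions.
interface SMP {
    void play();
    void pause();
    void seek(long positionMillis);
}

// Hypothetical internal collaborator: clients never reference it directly.
final class PlaybackController {
    private boolean playing;

    void start() { playing = true; }
    void halt() { playing = false; }
    boolean isPlaying() { return playing; }
}

// Hypothetical internal collaborator that owns the playback position.
final class SeekHandler {
    private long positionMillis;

    void moveTo(long positionMillis) {
        // Clamp negative positions to the start of the media.
        this.positionMillis = Math.max(0L, positionMillis);
    }

    long position() { return positionMillis; }
}

// The facade holds no logic of its own; it only forwards each call.
final class SMPFacade implements SMP {
    private final PlaybackController playback;
    private final SeekHandler seeker;

    SMPFacade(PlaybackController playback, SeekHandler seeker) {
        this.playback = playback;
        this.seeker = seeker;
    }

    @Override public void play() { playback.start(); }
    @Override public void pause() { playback.halt(); }
    @Override public void seek(long positionMillis) { seeker.moveTo(positionMillis); }
}
```

Because clients only ever hold a reference of type SMP, the collaborators behind the facade can be split, merged or renamed without any change to client code.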

Model View Presenter

In broad terms, we wrote SMP following a Model View Presenter (MVP) pattern, with a passive view. Our model exists with no knowledge of the view and the view layer is, in effect, a client of SMP. An integrating application can therefore flexibly use a bespoke view layer to control content, and use the player to play either audio-video or audio-only content with no changes required at all. Our tests reflect this division, with detailed unit tests covering the core player functionality and a small number of very simple tests covering our thin UI layer.

The fact that we had such thin UI tests circumvented the pitfall of ‘flaky’ UI tests that developers usually end up ignoring, and in keeping the UI tests small in number, we avoided the ice cream cone anti-pattern in favour of a test pyramid.
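As a rough illustration of what a passive view means in practice, the sketch below shows a model that knows nothing about the view, a view interface with no logic, and a presenter that connects the two. Every name here (PlayerView, PlayerModel, PlayerPresenter, RecordingView) is hypothetical and chosen for the example; only the pattern itself is taken from the description above.

```java
import java.util.ArrayList;
import java.util.List;

// Passive view: it exposes display operations and contains no decisions.
interface PlayerView {
    void showPlaying();
    void showPaused();
}

// The model has no reference to any view type; it only notifies listeners.
final class PlayerModel {
    interface Listener {
        void onPlayingChanged(boolean playing);
    }

    private final List<Listener> listeners = new ArrayList<>();
    private boolean playing;

    void addListener(Listener listener) { listeners.add(listener); }
    void play() { setPlaying(true); }
    void pause() { setPlaying(false); }

    private void setPlaying(boolean value) {
        if (playing == value) {
            return; // no change, so nothing to announce
        }
        playing = value;
        for (Listener listener : listeners) {
            listener.onPlayingChanged(value);
        }
    }
}

// The presenter translates model changes into view calls.
final class PlayerPresenter {
    PlayerPresenter(PlayerModel model, PlayerView view) {
        model.addListener(playing -> {
            if (playing) {
                view.showPlaying();
            } else {
                view.showPaused();
            }
        });
    }
}

// A recording view that a unit test could use instead of real Android UI.
final class RecordingView implements PlayerView {
    final List<String> calls = new ArrayList<>();

    @Override public void showPlaying() { calls.add("playing"); }
    @Override public void showPaused() { calls.add("paused"); }
}
```

With this split, the interesting behaviour can be exercised without a device or emulator, leaving only the thin rendering code for UI tests.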

Test Driven Development

We were strict in our policy of Test Driven Development (see Growing Object-Oriented Software Guided By Tests by Freeman and Pryce). At the beginning of the project, because domain concepts and appropriate levels of abstraction weren’t immediately obvious, we wrote tests over slightly inconsistent test boundaries and reshaped tests as the domain became clearer. This is similar to the practice of using small tests to explore an approach before consolidating them into more logical units, or deleting those that become obsolete.

When developing a new feature later in the project, such as subtitle capability, we wrote tests from the outside in - writing a test that interacted with SMP as if it were a client. We then wrote the simplest code to get the test to pass, immediately committed (and pushed) the code, and then refactored, safe in the knowledge that our tests would go red if we broke any behaviour. This wasn’t an infrequent occurrence, but because our commits were very small it was easy to revert and try again.

Often in the first stage of getting a test to pass, we would write code in the aforementioned SMPFacade class, but during refactoring we would force a new domain concept to emerge to which calls would be delegated. This latter step maintained SMPFacade’s status as a facade class. For more details on the logic behind what we chose to change when refactoring, take a look at .

Test objects and mocks

We mocked only the boundaries of our system, which were represented by an ever-shifting diagram on a whiteboard next to our desks (see this article on ). As a result, our tests were to the greatest possible extent independent of our production code, rather than being clamps around every class that had to be removed and reattached for each refactor. We avoided the use of mocking frameworks and instead created fake objects for our tests.
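A hand-written fake can be very small. The sketch below is an assumption about what such an object might look like rather than SMP's real implementation: the names Persistence, readBoolean, writeBoolean and FakePersister are borrowed from the contract test shown later in this article, but the interface definition and the in-memory body are invented for illustration.

```java
import java.util.HashMap;
import java.util.Map;

// Assumed shape of the boundary interface, inferred from how the
// contract test later in the article calls it.
interface Persistence {
    boolean readBoolean(String key);
    void writeBoolean(String key, boolean value);
}

// An in-memory fake: real behaviour, but no Android dependency,
// so it can be used in plain unit tests.
final class FakePersister implements Persistence {
    private final Map<String, Boolean> values = new HashMap<>();

    @Override
    public boolean readBoolean(String key) {
        // Unset keys read as false, matching the behaviour the
        // contract test expects.
        Boolean stored = values.get(key);
        return stored != null && stored;
    }

    @Override
    public void writeBoolean(String key, boolean value) {
        values.put(key, value);
    }
}
```

Unlike a framework-generated mock, a fake like this carries its behaviour with it, so individual tests do not have to restate what each call should return.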

As part of this strategy, we were able to avoid setting up mock objects in each of the tests in which they were used and consolidated mock behaviour into single objects, making the tests more comprehensible. This also aided us in the avoidance of DRY violations, as such violations would present a nuisance early in the development process and demand our attention. For example, if the same three steps need to be in the setup of every test, this suggests that there should be a class for that. Occasionally we went as far as writing contract tests that asserted that both our mock objects and the real domain objects behaved in the same way, for example with our adapter for SharedUserPreferences.

```java
public abstract class PersistenceContractTest extends AndroidTestCase {

    @Test
    public void testThatReadingAnUnsetBooleanReturnsFalse() {
        Persistence persister = getPersister();
        assertFalse(persister.readBoolean("unset_value"));
    }

    @Test
    public void testThatWeCanReadWhatWeCanWrite() {
        Persistence persister = getPersister();
        String key = "valid_key";
        boolean value = true;
        persister.writeBoolean(key, value);
        assertThat(persister.readBoolean(key), is(value));
    }

    abstract Persistence getPersister();
}
```

```java
public class SharedPreferencesContractTest extends PersistenceContractTest {

    @Override
    Persistence getPersister() {
        SharedUserPreferences sharedUserPreferences =
                new SharedUserPreferences(getContext());
        sharedUserPreferences.clear();
        return sharedUserPreferences;
    }
}
```

```java
public class FakePersistenceTest extends PersistenceContractTest {

    @Override
    Persistence getPersister() {
        return new FakePersister();
    }
}
```

There were some lessons that we learned as our tests grew, typically around aspects such as a large amount of setup being required for each test. It is entirely possible that our test boundaries were too large. For developers who have been on the project from the beginning this could be great: total freedom to refactor safely! However, when welcoming new developers to the project some have found the amount of setup required for our tests to be a hindrance.

In an attempt to mitigate this, we created helper classes such as test object builders that, for example, recorded the objects they were creating to allow the tests to interact with mocks to simulate real world behaviour. We also created a bank of test data builders that provided arbitrary test objects and have had interesting discussions on whether test data should actually have a random element in it.

The arguments in favour of this are that it would ensure that we do not rely upon ‘magic’ values in our code, so would be more likely to find edge cases early. The argument against it is that introducing a stochastic element to tests makes reproducibility more difficult. We haven’t introduced random test data, but also haven’t drawn a firm conclusion on this matter. Answers on a postcard.
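To make the trade-off concrete, here is a minimal sketch of a test data builder. It is not taken from the SMP codebase: MediaItem, MediaItemBuilder and all of their methods are hypothetical. The seeded variant is one possible middle ground that we have not adopted; it relies on the fact that java.util.Random produces the same sequence for the same seed, so a failing run can be replayed if the seed is logged.

```java
import java.util.Random;

// Hypothetical domain object used only for this example.
final class MediaItem {
    final String title;
    final long durationMillis;

    MediaItem(String title, long durationMillis) {
        this.title = title;
        this.durationMillis = durationMillis;
    }
}

// Test data builder: tests state only the values they care about
// and accept arbitrary defaults for everything else.
final class MediaItemBuilder {
    private String title = "arbitrary title";
    private long durationMillis = 60_000L;

    static MediaItemBuilder aMediaItem() {
        return new MediaItemBuilder();
    }

    // Variant with generated values. The same seed always yields the
    // same values, which keeps a failing test reproducible.
    static MediaItemBuilder aRandomMediaItem(long seed) {
        Random random = new Random(seed);
        MediaItemBuilder builder = new MediaItemBuilder();
        builder.title = "title-" + random.nextInt(1_000_000);
        builder.durationMillis = 1 + random.nextInt(3_600_000);
        return builder;
    }

    MediaItemBuilder withTitle(String title) {
        this.title = title;
        return this;
    }

    MediaItemBuilder withDurationMillis(long durationMillis) {
        this.durationMillis = durationMillis;
        return this;
    }

    MediaItem build() {
        return new MediaItem(title, durationMillis);
    }
}
```

A test that only cares about the title would write `aMediaItem().withTitle("Episode 1").build()` and leave the duration to the builder.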

Test naming convention

To make tests easier to consume for developers unfamiliar with the codebase, we attempted a naming convention that describes tests in terms of their context, with individual tests describing the action and the assert. This follows a similar concept presented in . For example:

```java
public class WhenVideoIsPaused {

    public void setup() {
        …
    }

    @Test
    public void andStopIsInvoked_PlaybackStops() {
        …
    }
}
```

State machine

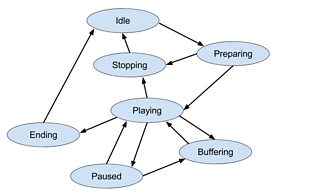

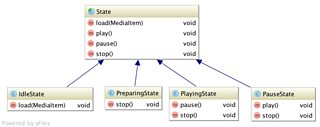

A media player is, by its nature, a stateful system. At any point the player can be playing, paused, loading or buffering, and only certain transitions are possible. Our reaction to this domain concept was to model our system as a state machine. The player transitions through each stage in its lifecycle, and is always able to react appropriately to messages from the outside world, be it with the appropriate transition or with no operation.

For example, when in a paused state, calling pause does nothing, but calling play prompts a change to a playing state. Initially, we used if/else clauses to model the state machine, as part of our ‘quickest route to green’ strategy. Later, we introduced state classes, in which individual states inherited from a parent State superclass with default no-op implementations of all methods on the interface, so individual pieces of behaviour could be implemented in a bespoke fashion for each state.
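The state-class approach can be sketched as follows. This is a simplified illustration with only two states, not SMP's real state machine: the class names, the choice to return the next state from each method, and the StatefulPlayer wrapper are all assumptions made for the example.

```java
// Parent class with no-op defaults: by default every message leaves
// the player in its current state.
abstract class State {
    State play() { return this; }
    State pause() { return this; }
    abstract String name();
}

// When playing, only pause causes a transition; play is a no-op.
final class Playing extends State {
    @Override State pause() { return new Paused(); }
    @Override String name() { return "playing"; }
}

// When paused, only play causes a transition; pause is a no-op.
final class Paused extends State {
    @Override State play() { return new Playing(); }
    @Override String name() { return "paused"; }
}

// Hypothetical owner of the current state. It contains no if/else:
// each call is handed to whichever state is current.
final class StatefulPlayer {
    private State state = new Paused();

    void play() { state = state.play(); }
    void pause() { state = state.pause(); }
    String stateName() { return state.name(); }
}
```

Adding a new state such as buffering then means adding one class and overriding only the messages that state reacts to, rather than extending every conditional in the player.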

Event Bus

At the beginning of the project, we discussed the use of an event bus, concluding that we would use one to announce events such as seekCompleted which would need to be written to developer logs, to our statistics systems or to client features external to SMP such as . All of these concepts are independent of the inner workings of SMP, so it made sense for them to subscribe to output events without the core player itself needing to be aware of them.

We avoided leaking our event bus into the UI code or into tests so we would be free to either change its implementation or abandon it altogether. The event bus was unit-tested in isolation to ensure that it was behaving as expected. Initially our event bus was a singleton, as this was the simplest solution and there was no need to support multiple media players in the same application in our first release. We gradually refactored away from this, and now have one event bus per instance of SMP.
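For illustration, a per-instance event bus can be as small as the sketch below. This is an assumed design, not the SMP implementation: EventBus, subscribe, announce and the SeekCompleted class (named after the seekCompleted event mentioned above) are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// A plain object rather than a singleton, so each player instance can
// own its own bus and subscribers never see another player's events.
final class EventBus {
    private final Map<Class<?>, List<Consumer<Object>>> subscribers = new HashMap<>();

    // Registers a handler for events of exactly the given class.
    <T> void subscribe(Class<T> eventType, Consumer<T> handler) {
        subscribers
                .computeIfAbsent(eventType, key -> new ArrayList<>())
                .add(event -> handler.accept(eventType.cast(event)));
    }

    // Delivers the event to every handler registered for its class.
    void announce(Object event) {
        List<Consumer<Object>> handlers = subscribers.get(event.getClass());
        if (handlers == null) {
            return; // nobody is listening, which is not an error
        }
        // Iterate over a copy so a handler may subscribe during delivery.
        for (Consumer<Object> handler : new ArrayList<>(handlers)) {
            handler.accept(event);
        }
    }
}

// Hypothetical event type carrying the position that was seeked to.
final class SeekCompleted {
    final long positionMillis;

    SeekCompleted(long positionMillis) {
        this.positionMillis = positionMillis;
    }
}
```

A statistics or logging feature would call `subscribe(SeekCompleted.class, ...)` on the bus belonging to one player, and the core player would simply announce the event without knowing who, if anyone, receives it.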

Primitive Obsession

An aspect of our codebase that has made it considerably easier for other developers to use is our avoidance of primitive obsession. Primitive obsession is a situation that emerges when primitives are used to pass around domain concepts. The first issue that this can cause is a lack of clarity over what variables represent as they move through the system. Does int duration represent time in milliseconds, seconds or years? The second issue is that methods with multiple arguments of the same primitive type depend on callers getting the argument order right, which makes it tremendously easy to start mixing up variables. For example, you could easily pass arguments in the wrong order to a method with the following signature with no compilation error:

```java
void showProgress(int mediaPosition, int mediaDuration)
```

It’s clearer to pass MediaProgress objects that hold information on both the unit and meaning of domain concepts. The same applies when passing around String programmeTitle and String episodeName. You will never want a programmeTitle to be used as an episodeName, so why not make use of type safety and push these concepts into classes ProgrammeTitle and EpisodeName?
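A minimal sketch of such wrapper types is shown below. MediaProgress is named above; MediaPosition, MediaDuration, the factory methods and fractionComplete are hypothetical and exist only to show how the unit and the meaning can travel with the value.

```java
// Wraps a playback position. The only way to create or read one
// names the unit explicitly.
final class MediaPosition {
    private final long millis;

    private MediaPosition(long millis) { this.millis = millis; }

    static MediaPosition fromMilliseconds(long millis) {
        return new MediaPosition(millis);
    }

    long toMilliseconds() { return millis; }
}

// Wraps a total duration. It is a different type from MediaPosition,
// so the compiler rejects the two being swapped.
final class MediaDuration {
    private final long millis;

    private MediaDuration(long millis) { this.millis = millis; }

    static MediaDuration fromMilliseconds(long millis) {
        return new MediaDuration(millis);
    }

    long toMilliseconds() { return millis; }
}

// Combines both concepts into the single object that is passed around.
final class MediaProgress {
    private final MediaPosition position;
    private final MediaDuration duration;

    MediaProgress(MediaPosition position, MediaDuration duration) {
        this.position = position;
        this.duration = duration;
    }

    // Fraction of the media played so far, or 0 when the duration is unknown.
    double fractionComplete() {
        if (duration.toMilliseconds() <= 0) {
            return 0.0;
        }
        return (double) position.toMilliseconds() / duration.toMilliseconds();
    }
}
```

With these types, `new MediaProgress(duration, position)` fails to compile, whereas the equivalent mistake with two int arguments would go unnoticed until runtime.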

An exception to this was with UI code in Android, where we avoided passing domain objects into the UI entirely due to a limitation in the refactoring tools in Android Studio. If the Test Artefact is set to ‘Unit Tests’ rather than ‘Android Connected Tests’, and one uses the refactoring tools to change a domain object, the code in the connected tests will not be changed, which makes it easy to inadvertently break the build! However, Android Studio is frequently updated, so that problem may have been solved by the time you are reading this.

Inheritance vs. composition

There is a lot of literature on the concept of ‘Composition over Inheritance’. In SMP we do use inheritance in some places, to a positive end: for example, with the states in our state machine and in the classes that wrap primitives.

The arguments in favour of composition are that it increases flexibility and provides more informative domain objects. We fully accept both points, but rather than couching our standpoint as composition over inheritance, we take the slightly less dictatorial stance of ‘try composition first’. Composition will very often provide a better solution but inheritance does, in my opinion, have its place. As it was, we were careful to avoid inheritance until it was screamingly obvious that it was appropriate, such that we didn’t inhibit the flexibility of our code by introducing it at too early a stage.

Open/closed

Good code should be open for extension and closed for modification. An example of code that is not open for extension is something like the following:

```java
if (mediaIdentifier.getClass() == A.class) {
    findMediaUsingStrategyA();
} else if (mediaIdentifier.getClass() == B.class) {
    findMediaUsingStrategyB();
}
```

If I add a mediaIdentifier of type C, I then need to add another strategy and another clause to my if statement. Alternatively, using a registry we can have:

```java
mediaStrategies.getStrategyFor(mediaIdentifier).invoke()
```

In this case, there is a piece of code for finding media that will not need to change when you add another media type. A further extension of this, not yet implemented, would be to make the media identifier able to contain the information on how to resolve its own media. That way, adding a new media identifier could be done without making any code changes to the media resolution pipeline itself.
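One way such a registry could be built is sketched below. This is an assumption rather than the SMP implementation: getStrategyFor and invoke mirror the call above, while MediaIdentifier as an interface, the register method, the IdentifierA, IdentifierB and IdentifierC classes (standing in for A, B and C) and the String return value of invoke are hypothetical and chosen so that the example is easy to check.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical marker interface for anything that identifies media.
interface MediaIdentifier {}

final class IdentifierA implements MediaIdentifier {}
final class IdentifierB implements MediaIdentifier {}
final class IdentifierC implements MediaIdentifier {}

// Hypothetical strategy contract. It returns a description here only
// so that the outcome can be observed.
interface MediaStrategy {
    String invoke();
}

// The registry maps an identifier's class to the strategy that
// resolves it. The lookup code never changes when a type is added.
final class MediaStrategies {
    private final Map<Class<? extends MediaIdentifier>, MediaStrategy> registry = new HashMap<>();

    void register(Class<? extends MediaIdentifier> type, MediaStrategy strategy) {
        registry.put(type, strategy);
    }

    MediaStrategy getStrategyFor(MediaIdentifier mediaIdentifier) {
        MediaStrategy strategy = registry.get(mediaIdentifier.getClass());
        if (strategy == null) {
            throw new IllegalArgumentException(
                    "No strategy registered for " + mediaIdentifier.getClass().getSimpleName());
        }
        return strategy;
    }
}
```

Supporting a third identifier type is then a single `register(IdentifierC.class, ...)` call at the point where the registry is assembled, with no edits to getStrategyFor or to its callers.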

Dependency inversion

We’ve had a very good run at inverting our dependencies in SMP, and to a degree we’ve managed it. Our media selection, our statistics providers and our monitoring rely on SMP, not the other way round. However, at the core of SMP lies ExoPlayer, and SMP really does depend very heavily on ExoPlayer. The lifecycle of SMP was informed by the requirements of ExoPlayer, and in its current state we would be unable to arbitrarily substitute ExoPlayer for another media player. SMP knows all about its preparation lifecycle and implementation details such as ExoPlayer’s track renderers. We’ve no immediate plans to address this but if we ever do need to change the underlying solution of SMP we’d have to turn our architecture into something more resembling a . It’s possible that attempting to invert that dependency too early would have been detrimental to our solution.

Final thoughts

Throughout development SMP has remained deployable and developers have found SMP to be an easy codebase in which to work. I think that both of these factors are indicative that we got something right with our approach. We’ve been able to add new features without needing to perform shotgun surgery on the codebase, and potential bugs that were introduced have usually been caught by our tests before they had the chance to scuttle beyond a developer’s laptop.

That’s not to say that we’ve been completely free of bugs. Generally when we’ve missed something, it’s been on the edge of our system. Perhaps it’s important to be mindful that even when the core of the system is well supported by a thorough rack of tests, there are always some things that you’ll miss, and that’s why we’re eternally grateful for our attentive software testers. We’ve also had issues highlighted by our beta users, who were warned by the beta label that things may not work perfectly, and were able to provide feedback to the BBC before we launch SMP to a larger audience.

In terms of things I’d have done differently, I think smaller tests might have made it easier for new developers to get started, but based on previous experience I’d rather have tests that err on the side of too large than those that get in the way of development by pinning down a codebase. I’ve also spoken mainly about our architectural approach. There’s a lot more discussion to be had on other aspects of software development: on the relationship between product, test and dev; on knowing when to push back on acceptance criteria; on interacting cohesively with other teams; and on early delivery of a minimum viable product. All of these have been important factors in the success of the SMP project.

Thanks to Ross Beazley for technical leadership on this project and significant contribution to this article.