In 2009, iPlayer became the first video on-demand service to offer audio described content. Audio description makes video more accessible to blind and visually impaired people by narrating what is happening on screen, helping them follow the action.

Back then, iPlayer was a catch-up service for the BBC: programmes were only available on demand for a limited period after they had been broadcast, and were recorded from terrestrial TV streams. Since then, iPlayer has evolved to allow programmes to be made available before they are broadcast. This is made possible by our file-based delivery workflow, in place since 2013, which lets pre-recorded shows become available on iPlayer as soon as, or before, they are broadcast on TV.

Unfortunately, as of 2018, audio described content was still stuck in the catch-up paradigm: it was only made available on iPlayer after the programme had been broadcast. This meant that older shows, such as Doctor Who, brought back to iPlayer as box-sets without being scheduled for TV, did not get their audio described versions.

This frustrated viewers who regularly used audio described content on iPlayer, only to find some programmes missing their audio described version even when it had been available in the past or was due to be broadcast in the near future.

With season 2 of Killing Eve released on iPlayer as a box-set, every episode with an audio described version, I felt it was a good time to tell our side of the story. This blog post covers some of the tech we used to make audio described content available on iPlayer.

The media files

To create audio described programmes we need two types of media.

Video file of a scheduled programme (MXF file)

We receive a video MXF file from the production team who made the programme, in a very high-quality, high-bitrate AS-11 DPP format. This format was developed in conjunction with other UK broadcasters for file-based delivery workflows. The MXF file contains the video and the original programme audio without audio description, and is used to create all the different formats needed to stream or download our content. It may be in stereo or surround sound, standard definition or HD. The audio described version of the programme is also created in the AS-11 DPP format, with the same quality and features as the regular programme but with the audio description mixed in.

Audio Description Studio Signal (AD WAV file)

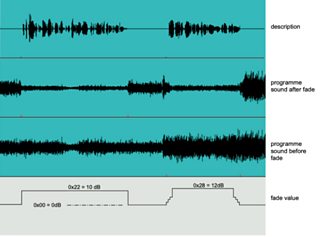

An audio description studio signal is produced and delivered to us by our partner Red Bee Media as a stereo WAV file. The left channel contains the audio description voice track, with no original programme audio; the right channel carries control data, not something you’d want to listen to unless you find a fax machine weirdly soothing. The control data is used to dip the original programme audio so that the audio description is audible over any background sound in the programme. A paper from BBC R&D has more detail on the audio description studio signal.

The media tools

To do the mixing we use the following tools.

GStreamer

GStreamer is an open source multimedia framework that we use with a plugin developed by David Holroyd. The plugin works only on audio, so the original programme audio must first be extracted from the MXF. It mixes the original programme audio with the audio description voice track, adjusting the gain of the programme audio as signalled by the control track so that the description can be heard clearly. The output is a single mono track, so for a stereo programme the plugin has to be run twice: once on the left audio channel and once on the right.
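To make the mixing step concrete, here is a minimal sketch of what "adjusting the gain of the programme audio" means for one channel. It is not the plugin's code: it assumes the control track has already been decoded into a per-sample gain between 0.0 and 1.0 (decoding the real control signal is not shown), and the function name `mix_with_ducking` is hypothetical.

```python
from array import array


def mix_with_ducking(programme, voice, gains):
    """Mix one programme channel with the audio description voice track.

    programme, voice: sequences of signed 16-bit samples of equal length.
    gains: per-sample gain (0.0 to 1.0) applied to the programme audio.
    Assumption: the gains have already been decoded from the control track.
    """
    if not (len(programme) == len(voice) == len(gains)):
        raise ValueError("programme, voice and gains must be the same length")
    out = array("h")
    for p, v, g in zip(programme, voice, gains):
        # Dip the programme audio, then add the description on top.
        s = round(p * g) + v
        # Clip to the signed 16-bit range rather than letting it wrap around.
        out.append(max(-32768, min(32767, s)))
    return out
```

For a stereo programme the same function would be called twice, once with the left channel and once with the right, each time with the same voice track and gains, mirroring the two plugin runs described above.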

Principles of fading original programme audio during description passages. Source: WHP051

BMX

BMX is an open source project from BBC R&D, written by Philip de Nier, for reading and writing the MXF file formats in common use at the BBC. It has had many uses at the BBC over the years, most recently as a widely used engine for generating DPP files. We use the following BMX utilities to create our audio described content:

- bmxtranswrap to extract the stereo audio channels from the MXF file and convert them to uncompressed WAV format.

- mxf2raw to extract the MXF metadata and raw essences, which are the video and audio elements.

- raw2bmx to assemble an MXF from the metadata and raw essences.

The process

The following steps outline how we use the media files and tools to create an audio described version of a programme. These are the instructions implemented in our code that we deploy on EC2 instances in AWS.

Preparing the AD WAV file

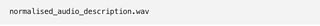

We receive AD files in a mixture of 16-bit and 24-bit depths, so we normalise them all to 16-bit with GStreamer.
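As an illustration of what that normalisation does to the samples, the sketch below reduces little-endian signed 24-bit PCM to 16-bit by truncation, keeping the top 16 bits of each sample. This is our own simplified stand-in, not GStreamer's conversion: GStreamer may also apply dithering and rewrites the WAV header, neither of which this sketch does, and `pcm24_to_pcm16` is a hypothetical name.

```python
def pcm24_to_pcm16(data: bytes) -> bytes:
    """Reduce little-endian signed 24-bit PCM samples to 16-bit by truncation."""
    if len(data) % 3:
        raise ValueError("24-bit PCM data must be a whole number of 3-byte samples")
    # Byte 0 of each sample is the least significant. Keeping bytes 1 and 2
    # keeps the top 16 bits, equivalent to an arithmetic right shift by 8.
    return b"".join(data[i + 1:i + 3] for i in range(0, len(data), 3))
```

Because two's complement is preserved by dropping the low byte, negative samples stay negative: a 24-bit value of -1 (`FF FF FF`) becomes a 16-bit -1 (`FF FF`).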

Extracting the audio from the MXF file

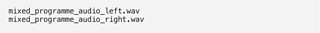

First, we extract the left and right audio channels from the MXF and convert them into separate mono WAV formatted files.
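In our workflow bmxtranswrap handles the extraction from the MXF. The sketch below only illustrates the second half of this step, splitting an interleaved 16-bit stereo WAV into two mono WAV files, using Python's standard `wave` module. It assumes 16-bit samples, and the function names are hypothetical.

```python
import wave


def split_stereo_frames(frames: bytes) -> tuple[bytes, bytes]:
    """Deinterleave 16-bit stereo PCM into separate left and right byte strings."""
    if len(frames) % 4:
        raise ValueError("16-bit stereo data must be a whole number of 4-byte frames")
    # Each stereo frame is 4 bytes: 2 bytes of left sample, then 2 of right.
    left = b"".join(frames[i:i + 2] for i in range(0, len(frames), 4))
    right = b"".join(frames[i + 2:i + 4] for i in range(0, len(frames), 4))
    return left, right


def split_stereo_wav(src_path: str, left_path: str, right_path: str) -> None:
    """Write each channel of a 16-bit stereo WAV file to its own mono WAV file."""
    with wave.open(src_path, "rb") as src:
        if src.getnchannels() != 2 or src.getsampwidth() != 2:
            raise ValueError("expected a 16-bit stereo WAV file")
        rate = src.getframerate()
        frames = src.readframes(src.getnframes())
    left, right = split_stereo_frames(frames)
    for path, data in ((left_path, left), (right_path, right)):
        with wave.open(path, "wb") as dst:
            dst.setnchannels(1)
            dst.setsampwidth(2)
            dst.setframerate(rate)
            dst.writeframes(data)
```

Copying bytes rather than decoding samples keeps the split exact and independent of the machine's byte order.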

Each audio channel starts with what is called a “handle”: a sequence of tones used for broadcast timing. The video shows a ticking clock during this handle period. We need to remove the handle from the audio before mixing, because the AD file does not contain it.

So we extract the handle and store it for later, then extract the audio after the handle to leave just the original programme audio. The audio is now ready to be mixed.
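Once a channel is decoded into samples, separating the handle is a simple split at a sample offset. The sketch below assumes the handle length is known in seconds; the post does not say how long the handle is or how it is located, so `handle_seconds` and the function name are hypothetical.

```python
def split_handle(samples, sample_rate, handle_seconds):
    """Split one audio channel into (handle, programme) at a known offset.

    handle_seconds is a hypothetical input: how the real workflow finds the
    end of the handle is not described here.
    """
    handle_len = round(sample_rate * handle_seconds)
    if handle_len > len(samples):
        raise ValueError("channel is shorter than the handle")
    return samples[:handle_len], samples[handle_len:]
```

The first element is kept aside to be prepended again after mixing; only the second goes to the mixer.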

Mixing the audio description with the original programme audio

The GStreamer plugin then mixes each of the original programme’s left and right audio channels with the audio description track. The stored handle is then prepended to both mixed channels so that they are back in sync with the video. This gives us the final mixed audio described tracks, ready for reassembly.
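Putting the handle back can be sketched as a concatenation plus a sanity check that the channel is the same length it was when extracted. The length check is our own assumption about what "in sync with the video" requires, and `restore_handle` is a hypothetical name.

```python
def restore_handle(handle, mixed_programme, original_length):
    """Prepend the stored handle to a mixed channel and check it still fits the video.

    original_length is the sample count of the channel as extracted from the
    MXF, handle included. The equal-length check is an assumption of this
    sketch: a mismatch would mean the mixed audio no longer lines up with
    the video.
    """
    restored = list(handle) + list(mixed_programme)
    if len(restored) != original_length:
        raise ValueError(
            f"mixed channel has {len(restored)} samples, expected {original_length}"
        )
    return restored
```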

Extracting essences

To insert the mixed audio described tracks, we first need to extract all the essences from the original MXF file. These consist of the AS-11 metadata, one video essence (the bulk of the MXF) and the audio essences:

- Metadata: as11-core.txt, as11-dpp.txt and as11-segments.txt

- Video: essence_v0.raw

- Audio: essence_a0.raw, essence_a1.raw

Assembling the final MXF file

The mixed audio described tracks are used in place of the audio essences, but all other original extracted essences, video and metadata are used to construct the new audio described MXF. We now have our audio described MXF that is used in our file-based delivery workflow to create the compressed assets that can be played in iPlayer.
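The substitution itself can be pictured as building the list of inputs for reassembly, swapping each extracted audio essence for its mixed counterpart and passing everything else through untouched. This sketch only assembles file names using the essence names listed above; the mixed-track names and the left/right mapping are hypothetical, and the actual raw2bmx invocation is not shown.

```python
def reassembly_inputs(essences, mixed_audio):
    """Return the inputs for the new MXF, with audio essences replaced.

    essences: names of the files extracted from the original MXF.
    mixed_audio: maps each original audio essence name to the mixed audio
    described track that replaces it (hypothetical file names).
    """
    inputs = []
    for name in essences:
        if name.startswith("essence_a"):
            # Audio essence: use the mixed AD track in its place.
            if name not in mixed_audio:
                raise KeyError(f"no mixed track for {name}")
            inputs.append(mixed_audio[name])
        else:
            # Metadata and video are reused unchanged.
            inputs.append(name)
    return inputs
```

Failing loudly when a mixed track is missing avoids silently wrapping an MXF that still contains the original, undescribed audio.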

The quirks

Performance

Downloading, uploading, extracting and assembling media files is slow because MXF files are large: an hour-long HD MXF file is around 60 GB. To reduce processing time, we use i3.large instances for their high network performance and high I/O performance from SSD storage.

Even with these features, an instance can take over 40 minutes to complete one mix. This causes a problem for auto scaling.
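As a rough back-of-the-envelope check using the figures above (decimal units, 1 GB = 1000 MB), a 60 GB file taking 40 minutes end to end averages about 25 MB/s; since a mix can take longer than that, the real average is at most this. For comparison, assuming 48 kHz, 16-bit programme audio (the sample rate is our assumption), one hour of a single mono channel is only 345.6 MB:

$$
\begin{aligned}
\frac{60\ \text{GB}}{40 \times 60\ \text{s}} &= \frac{60\,000\ \text{MB}}{2\,400\ \text{s}} = 25\ \text{MB/s} \\
48\,000\ \text{samples/s} \times 2\ \text{B} \times 3\,600\ \text{s} &= 345.6\ \text{MB}
\end{aligned}
$$

The gap between the two suggests most of the time goes on moving and rewrapping the video essence rather than on the audio mix itself.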

Auto Scaling

Our components commonly scale out when work piles up as messages on their input queue, and scale in when work is low. We want to prevent scale-in from terminating an instance that is in the middle of processing a message.

Instance scale-in protection allows an instance to protect itself from a scale-in event by requesting protection from the Auto Scaling group API. When one of our components has a long-running process, such as creating audio described content, it protects itself when it starts processing a message, then removes that protection once the message has been processed and the work is complete.
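One way to make sure protection is always removed, even if a mix fails, is a context manager. This is a Python sketch rather than our service code: it assumes a client like `boto3.client("autoscaling")`, whose `set_instance_protection` operation takes `InstanceIds`, `AutoScalingGroupName` and `ProtectedFromScaleIn`. The function name, group name and instance ID are hypothetical.

```python
from contextlib import contextmanager


@contextmanager
def scale_in_protection(client, group_name, instance_id):
    """Protect an instance from scale-in for the duration of a block.

    client is assumed to behave like boto3.client("autoscaling").
    """
    client.set_instance_protection(
        InstanceIds=[instance_id],
        AutoScalingGroupName=group_name,
        ProtectedFromScaleIn=True,
    )
    try:
        yield
    finally:
        # Always drop protection, even if the work raised, so the group
        # can scale in again once this instance is idle.
        client.set_instance_protection(
            InstanceIds=[instance_id],
            AutoScalingGroupName=group_name,
            ProtectedFromScaleIn=False,
        )
```

A worker would then wrap each message, for example `with scale_in_protection(autoscaling, "ad-mixer-group", instance_id): process(message)`, where `process` and `"ad-mixer-group"` are placeholders.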

Long-term storage means long-term costs

To reduce storage costs for the BBC, once a programme’s availability has expired on iPlayer, only the original MXF file is kept for long-term storage. All other versions of that programme are removed, including the audio described version. This is why in the past audio described content that was previously broadcast did not reappear on iPlayer when its original version was made available again.

This is no longer a problem, because the audio description studio signal is now kept in long-term storage. Whenever a programme is rescheduled for iPlayer, its audio described version is recreated. This is more cost-effective than storing the audio described version itself.

Testing with Docker

The majority of our microservices don’t manipulate media; that is done with on-site hardware or third-party cloud transcoders. The audio description workflow is different: it relies on the tools mentioned above, which only a few of our developers have installed locally. We could have mocked the calls to these tools and just checked the functionality of our own code, but we wanted something more robust.

Using Docker, we are able to run our code and tools as if they were running on an EC2 instance, which lets us test the whole audio description workflow locally and in our continuous integration workflow. We do this by starting from a base image of our EC2 instance’s operating system and installing our project on it as an RPM. When testing, we overwrite the compiled jar in the image with a local copy to avoid rebuilding the image.