We're learning what a cloud-based media production system should look like - by building one.

Project from 2018 - present

BBC R&D has spent a number of years looking at how general-purpose computing and networks can change the broadcasting industry, with projects such as IP Studio. We also took a first step into the world of cloud-based production during our Lightweight Live work.

Our cloud-fit production architecture project is building on these foundations, and exploring what a media production system built 'cloud first' should look like.

We aim to answer this question by prototyping a cloud-fit production system and releasing the results of our work as we go.

Why are we doing this?

The BBC is increasingly using Internet Protocol (IP) in its day-to-day business of making content for audiences. Thanks to earlier projects, we are now starting to use IP technologies in production centres. However, the content-making capacity and equipment in these facilities are still mostly fixed during the design and fit-out stages, meaning large changes can only occur during a refit. The business operating model of a current-generation IP facility is also fairly inflexible, with large capital expenditure required upfront.

At the other end of the chain, IP is also becoming increasingly popular as a mechanism to distribute our content, with services such as iPlayer gaining audience share each year. Our current generation of iPlayer makes use of the cloud to move files and streams around, but it can't undertake any production operations: content still has to be created using traditional broadcast equipment in a physical production facility.

R&D is working with colleagues from around the BBC to join up these two areas, enabling broadcast centre-style production operations to occur within a software-defined cloud environment. We think the benefits of this will be huge, making our physical IP facilities even more flexible, and enabling us to deploy complete production systems on demand. Ultimately, this will help the BBC make more efficient use of resources and deliver more content to audiences.

The technical details: what does 'cloud-fit' mean?

To join up the worlds of broadcast centre production and the cloud, we need to understand how to take the high-bandwidth point-to-point signals that are used in our physical facilities and turn them into something that works well in a cloud computing environment. We think this requires a complete reinvention of how we handle media: traditional broadcast centres are typically built to move media in a serial manner, yet (broadly speaking) clouds achieve much of their flexibility, scale and resiliency by exploiting parallelism.

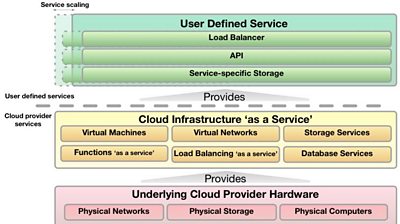

We think that a cloud-fit production system should be highly parallel and treat computing, network and storage as abstract resources. Furthermore, system architects will no longer talk about building and deploying applications or devices, but services. A cloud service is a user-defined, distributed unit of functionality that depends on dynamically provisionable underlying cloud components. Each service will provide an API, which we can use to give it production instructions.

Services will not depend on specific instances of an underlying resource (e.g. a particular IP address), but will use abstraction and encapsulation principles, such as load-balanced APIs, to provide parallel, scalable and resilient subsystems.

Going even further, serverless computing might enable us to provide API functionality without having to manage any underlying infrastructure at all (of course, in reality the infrastructure still exists, but the cloud provider manages it on our behalf in response to demand).

All of this adds up to something quite different to a conventional broadcast facility, where single instances of processing devices (such as a video encoder) are connected with point-to-point signals (rather than APIs).
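The idea that a service should hide which instance does the work can be illustrated with a small sketch. Everything here is hypothetical and for illustration only: `LoadBalancedService`, `make_worker` and the round-robin policy are assumptions, not part of our system, and real cloud load balancers are considerably more sophisticated.

```python
import itertools
from typing import Callable, List


class LoadBalancedService:
    """Hypothetical service front-end: callers see one API, not the workers behind it."""

    def __init__(self, workers: List[Callable[[str], str]]) -> None:
        if not workers:
            raise ValueError("at least one worker is required")
        self._workers = list(workers)
        # Cycle through worker indices so requests are spread round-robin.
        self._next_index = itertools.cycle(range(len(self._workers)))

    def handle(self, request: str) -> str:
        # Any worker can serve any request, so no single instance is special.
        worker = self._workers[next(self._next_index)]
        return worker(request)


def make_worker(name: str) -> Callable[[str], str]:
    # Stand-in for a dynamically provisioned instance (hypothetical).
    return lambda request: f"{name} processed {request}"


service = LoadBalancedService([make_worker("worker-a"), make_worker("worker-b")])
results = [service.handle(f"request-{i}") for i in range(4)]
```

Because callers only ever hold a reference to `service`, workers could be added, removed or replaced without any caller changing, which is the property a point-to-point signal chain lacks.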

Building production capabilities

We are building a system made up of services, each driven through its API. Each of our services has a well-defined purpose, and multiple services collaborate to achieve complete production workflows.

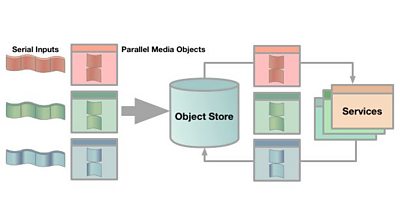

Our core hypothesis is that our system will work best if all media is stored by default: a distributed object store allows us to store and reference small pieces of audio, video and other data. We call these 'media objects'. By passing references to media objects between services we can build complex production functionality in a distributed, scalable and resilient manner.
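As a rough illustration of passing references rather than media between services, the sketch below uses a toy in-memory store. The names `MediaObjectRef`, `InMemoryObjectStore`, `capture_service` and `processing_service` are invented for this example, and a dictionary stands in for a real distributed store.

```python
import uuid
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class MediaObjectRef:
    """A small, cheap-to-pass reference to a stored media object (hypothetical)."""
    object_id: str


class InMemoryObjectStore:
    """Toy stand-in for a distributed store; a dict replaces real storage."""

    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, payload: bytes) -> MediaObjectRef:
        object_id = str(uuid.uuid4())
        self._objects[object_id] = payload
        return MediaObjectRef(object_id)

    def get(self, ref: MediaObjectRef) -> bytes:
        return self._objects[ref.object_id]


def capture_service(store: InMemoryObjectStore, payload: bytes) -> MediaObjectRef:
    # Stores incoming media and hands back only a reference.
    return store.put(payload)


def processing_service(store: InMemoryObjectStore, ref: MediaObjectRef) -> MediaObjectRef:
    # Reads by reference and writes a new object; the input object is left untouched.
    # Upper-casing bytes is a placeholder for real media processing.
    processed = store.get(ref).upper()
    return store.put(processed)


store = InMemoryObjectStore()
ref_in = capture_service(store, b"frame data")
ref_out = processing_service(store, ref_in)
```

Only the small `MediaObjectRef` values travel between the two services; the payloads stay in the store, and both the original and the processed object remain retrievable afterwards.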

Bridging the live/stored divide

Storing everything doesn't mean we can't do live production: live simply involves operating at low latency. In our system, live workflows (such as capturing cameras, vision mixing them and delivering the result) will be achieved by using media objects soon after they are stored. We can also force the objects to have a short duration to reduce the latency incurred whilst creating them.
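The link between object duration and latency can be shown with some simple arithmetic. This is a minimal sketch that assumes an object cannot be stored until all of its frames have arrived, and it ignores network and storage time; the 25 frames per second rate and the function name `object_fill_latency` are illustrative assumptions.

```python
from fractions import Fraction


def object_fill_latency(frames_per_object: int, frame_rate: Fraction) -> Fraction:
    """Seconds needed to accumulate one object's worth of frames."""
    if frames_per_object < 1:
        raise ValueError("an object must contain at least one frame")
    return Fraction(frames_per_object) / frame_rate


frame_rate = Fraction(25)  # assumed frame rate, for illustration only

for frames in (1, 5, 25):
    latency_ms = object_fill_latency(frames, frame_rate) * 1000
    print(f"{frames} frame(s) per object: {float(latency_ms):.0f} ms to fill")
```

Under these assumptions a single-frame object is complete after one frame period, whereas an object holding a second of video adds a full second before any downstream service can use it.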

Conventional broadcast centres use very different systems to handle live and stored media. However, in our API-driven system there is no longer a formal distinction between these operating modes: we think our media objects can provide the best of both worlds. This will mean users do not have to decide upfront how they want to use their content, and can benefit from ways of working that straddle live and stored workflows.

Achieving studio quality

In our previous Lightweight Live work, R&D looked at how public cloud computing could support low-cost production use cases, such as processing low-bitrate mobile phone footage from a remote location. In our cloud-fit production architecture work, we want to prove we can build a general-purpose system that can also handle studio quality uncompressed video. In order to achieve this, R&D is investigating how we can use on-premise private cloud solutions to provide the bandwidth and edge connectivity we'll need.
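To give a feel for the bandwidth involved, the sketch below estimates the data rate of a single uncompressed video stream. The format is an assumption chosen for illustration (1920x1080 active picture, 10-bit 4:2:2 sampling, which averages 20 bits per pixel, at 50 frames per second); it is not a statement of the formats our system targets, and it ignores audio, blanking and packet overheads.

```python
def uncompressed_video_bitrate(width: int, height: int,
                               bits_per_pixel: int, frames_per_second: int) -> int:
    """Active-picture bit rate in bits per second, with no overheads."""
    return width * height * bits_per_pixel * frames_per_second


# Assumed example format: 1080p50, 10-bit 4:2:2 (20 bits per pixel on average).
bitrate = uncompressed_video_bitrate(1920, 1080, 20, 50)
print(f"{bitrate / 1e9:.2f} Gbit/s per stream")
```

Under these assumptions each stream needs roughly 2 Gbit/s, so a handful of cameras quickly exceeds what a typical internet connection to a public cloud can carry.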

We don't think cloud-fit production is just about making low quality web video: it is about understanding how we can design future facilities, and increase our existing production capability with commodity cloud services.

Common data models

Cloud-fit production architecture is just one part of BBC R&D's work on the broadcast industry IP transition. Our vision is a future in which IP technology can seamlessly join up resources in broadcast centres, the cloud, and audience devices. To do this, we think it is vital to define common data models for content and production systems.

Our aim is to provide abstract ways of identifying pieces of media, as well as their relationship to time and to each other, independent of the implementation details of the systems used to manipulate and process them.
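A minimal sketch of what such an abstract description might contain is shown below. The `MediaElement` type and its field names are hypothetical, chosen only to show identity, timing and relationships living in one record that says nothing about where or how the media is stored.

```python
import uuid
from dataclasses import dataclass
from fractions import Fraction
from typing import Tuple


@dataclass(frozen=True)
class MediaElement:
    """Hypothetical implementation-independent description of a piece of media."""
    element_id: uuid.UUID                        # identity
    origin_time: Fraction                        # start, in seconds on a shared timeline
    duration: Fraction                           # length, in seconds
    derived_from: Tuple[uuid.UUID, ...] = ()     # relationship to other elements

    @property
    def end_time(self) -> Fraction:
        return self.origin_time + self.duration


def overlaps(a: MediaElement, b: MediaElement) -> bool:
    # True when the two elements share any span of the timeline.
    return a.origin_time < b.end_time and b.origin_time < a.end_time


camera = MediaElement(uuid.uuid4(), Fraction(0), Fraction(1, 25))
graded = MediaElement(uuid.uuid4(), camera.origin_time, camera.duration,
                      derived_from=(camera.element_id,))
later = MediaElement(uuid.uuid4(), Fraction(1), Fraction(1, 25))
```

Here `graded` records that it was derived from `camera` and covers the same span of time, while `later` is unrelated; any system that understands this record can reason about the media without knowing how it is encoded or stored.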

Outputs: Sharing as we go...

As well as working within the BBC, we want to share our research externally in order to contribute to wider broadcast industry discussions about cloud computing.

We've released some of the first low-level building blocks of our work as open source software:

- A Python library for handling grain-based media

- A JSON serialiser and parser for Python that supports some extensions convenient for our media grain formats

- A simple timestamp implementation used by various other libraries

We will also be publishing as much as we can in blogs and papers.

- BBC R&D - Cloud-Fit Production Update: Ingesting Video 'as a Service'

- BBC R&D - Tooling Up: How to Build a Software-Defined Production Centre

- BBC R&D - Beyond Streams and Files - Storing Frames in the Cloud

- BBC R&D - Storing Frames in the Cloud part 2: Getting them back out again

- BBC R&D - Storing Frames in the Cloud Part 3: An Experimental Media Object Store

- BBC R&D - High Speed Networking: Open Sourcing our Kernel Bypass Work

- BBC R&D - IP Studio

- BBC R&D - IP Studio: Lightweight Live

- BBC R&D - IP Studio: 2017 in Review - 2016 in Review

- BBC R&D - IP Studio Update: Partners and Video Production in the Cloud

- BBC R&D - Running an IP Studio

- BBC R&D - Building a Live Television Video Mixing Application for the Browser

- BBC R&D - Nearly Live Production

- BBC R&D - Discovery and Registration in IP Studio

- BBC R&D - Media Synchronisation in the IP Studio

- BBC R&D - Industry Workshop on Professional Networked Media

- BBC R&D - The IP Studio

- BBC R&D - IP Studio at the UK Network Operators Forum

- BBC R&D - Industry Workshop on Professional Networked Media

- BBC R&D - Covering the Glasgow 2014 Commonwealth Games using IP Studio

- BBC R&D - Investigating the IP future for BBC Northern Ireland