2018 was an important year for the broadcast production industry, with standards-based IP products coming to market, and broadcasters - including the BBC - starting to put IP at the core of new facilities. IP's flexibility should support R&D's vision of a new broadcasting system. To make that happen, we've been tackling the practical challenges of interoperability with the new standards, and looking at what needs to happen next.

BBC New Broadcasting House in Cardiff under construction by (cropped) on Flickr, .

BBC R&D's vision of the future has IP - Internet Protocol - at its heart. That means more than delivering streams over your broadband connection to your TV or smartphone; it also means changing the technology used in producing content. Part of our work on IP Studio has been about that, and what it means to the broadcast industry.

It's been a year since our last update in this area. At that point SMPTE - the organisation that brought the world standards for timecode, colour bars and the Serial Digital Interface (SDI) - had just published the main parts of ST 2110, its suite of standards for Professional Media over Managed IP Networks. This showed that manufacturers were serious about providing an interoperable solution for the future. It also means that many parts of our research work over the last few years are now available from the market.

Here's a summary of what happened in 2018...

It's a matter of timing

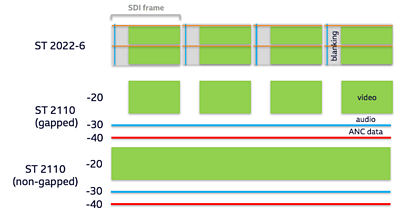

SMPTE ST 2110 differs from its predecessors in that it treats video, audio and data as separate streams, rather than simply "tunnelling" each frame (including unwanted blanking information) of SDI over IP:

This is a good thing, as it provides the flexibility to work separately with individual parts of the production media. This is essential for flexible reuse of content, and is something that we've been working on. But of course, we also need to be able to synchronise those parts as required. ST 2110 specifies how to do that... but only to a certain extent. To support our ideas on object-based media, we need to be able to identify where each part came from and when it was created. We are working with the Advanced Media Workflow Association (AMWA) on the model for this, and considering the fit with work happening elsewhere.

There's another important aspect of timing when it comes to ST 2110. Uncompressed video frames take a lot of bits to send (over a gigabit per second for 1080i HD). If a sending device puts all those bits onto the network as fast as it can, buffers in network switches or receiving devices may overflow. SMPTE have therefore published ST 2110-21, which models how the network switches and video receivers behave, and puts constraints on the "shape" of the traffic that devices can send. However, ST 2110-21 is not a "one size fits all" solution: it specifies both "narrow" and "wide" constraints, and the sending device indicates which one is being used. "Narrow" suits hardware with small buffer sizes, and is closest to how SDI works, while "wide" assumes larger buffers, which are more typical of software-based implementations. More on this later.
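To put a number on "over a gigabit per second", here is a rough back-of-envelope sketch. It assumes 1920x1080 pictures with 4:2:2 sampling at 10 bits (20 bits per pixel on average) at 25 frames per second, counts only the active picture, and ignores packet headers. The 1200-byte payload size is a hypothetical figure chosen to keep the sums simple, and the evenly spaced pacing is an idealisation, not the traffic model that ST 2110-21 actually defines.

```python
import math


def active_video_bitrate(width, height, bits_per_pixel, frames_per_second):
    """Bits per second for the active picture only (no blanking, no headers)."""
    return width * height * bits_per_pixel * frames_per_second


# Assumed format: 1080-line HD, 4:2:2 10-bit, 25 frames (50 fields) per second.
WIDTH, HEIGHT, BITS_PER_PIXEL, FPS = 1920, 1080, 20, 25

bitrate = active_video_bitrate(WIDTH, HEIGHT, BITS_PER_PIXEL, FPS)

# Hypothetical payload size per packet, for illustration only.
PAYLOAD_BYTES = 1200
bytes_per_frame = WIDTH * HEIGHT * BITS_PER_PIXEL // 8
packets_per_frame = math.ceil(bytes_per_frame / PAYLOAD_BYTES)

# Idealised pacing: packets spread perfectly evenly over one frame period.
even_spacing_us = (1 / FPS) / packets_per_frame * 1e6

print(f"{bitrate / 1e9:.3f} Gbit/s")
print(f"{packets_per_frame} packets per frame")
print(f"{even_spacing_us:.2f} microseconds between packets")
```

A sender that instead emitted each frame's packets back-to-back at line rate would deliver the same average bitrate in short bursts, which is the behaviour that small buffers struggle with.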

Bigger and brighter

UHD and HDR have featured heavily in R&D's work this year (e.g. World Cup on iPlayer), and we need to be sure our IP production facilities can cope. One of the selling points of IP-based infrastructure is that it is easy to extend to new formats, so ST 2110 supports UHD and HDR. But for cases where the huge data rates involved might be a problem, SMPTE are creating ST 2110-22 to allow for compressed streams. Of course we're making sure this supports VC-2 video.
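As a rough illustration of why compression becomes attractive, the sketch below compares uncompressed active-picture rates for an HD and a UHD format and works out how much compression would be needed to fit a given link. The formats (4:2:2 10-bit, 25 and 50 frames per second) and the 1 Gbit/s link budget are assumptions made for the example, not figures from any standard.

```python
def active_video_bitrate(width, height, bits_per_pixel, frames_per_second):
    """Bits per second for the active picture only (no blanking, no headers)."""
    return width * height * bits_per_pixel * frames_per_second


# Assumed formats: (width, height, average bits per pixel, frames per second).
FORMATS = {
    "HD 1080i25": (1920, 1080, 20, 25),
    "UHD 2160p50": (3840, 2160, 20, 50),
}

# Hypothetical link budget, used only to show the arithmetic.
LINK_BUDGET_BPS = 1_000_000_000


def compression_ratio_needed(bitrate, budget):
    """Smallest compression ratio that brings bitrate within budget."""
    return max(1.0, bitrate / budget)


rates = {name: active_video_bitrate(*fmt) for name, fmt in FORMATS.items()}

for name, rate in rates.items():
    ratio = compression_ratio_needed(rate, LINK_BUDGET_BPS)
    print(f"{name}: {rate / 1e9:.2f} Gbit/s, needs about {ratio:.1f}:1")
```

With these assumptions the UHD stream carries eight times the data of the HD one: four times the pixels at twice the frame rate.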

Making connections

NMOS is AMWA's suite of specifications to allow parts of a networked media facility to work together. It lets control applications find devices on the network and connect them. These are now stable specifications, and we are starting to see them appear in shipping products, not just lab samples. The NMOS team is now hard at work extending NMOS to further common operations within a facility; these include working with groups of connections (for example to a multiviewer in a studio gallery) and mapping audio channels within connections (for example selecting Welsh language channels from a multi-channel stream).
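To give a flavour of what "connecting" looks like to a control application, here is a minimal sketch of the request it might build to point a receiver at a sender. The path and body fields follow the NMOS IS-05 Connection API as we understand it, so check them against the published specification before relying on them. The UUIDs and SDP text are placeholders, and the function name is hypothetical. Nothing is sent over the network.

```python
import json

# Assumed API base path; the version segment depends on the deployment.
CONNECTION_API_BASE = "/x-nmos/connection/v1.0"


def build_staged_connection(receiver_id, sender_id, sdp_text):
    """Return (method, path, JSON body) for staging and activating a connection."""
    path = f"{CONNECTION_API_BASE}/single/receivers/{receiver_id}/staged"
    body = {
        "sender_id": sender_id,
        "master_enable": True,
        # Ask the receiver to apply the staged parameters straight away.
        "activation": {"mode": "activate_immediate"},
        # The sender's stream description, passed through unchanged.
        "transport_file": {"type": "application/sdp", "data": sdp_text},
    }
    return "PATCH", path, json.dumps(body)


# Placeholder identifiers, for illustration only.
RECEIVER_ID = "00000000-0000-0000-0000-000000000001"
SENDER_ID = "00000000-0000-0000-0000-000000000002"

method, path, body = build_staged_connection(RECEIVER_ID, SENDER_ID, "v=0\r\n")
print(method, path)
print(body)
```

A real controller would first look up the sender and receiver identifiers through discovery, then send this request to the device hosting the receiver.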

Keeping a tally

AMWA recently published IS-07, the NMOS Event and Tally Specification for how to send time-critical event data over IP networks - for example, sending camera tally information. It has been designed from the outset to be "web friendly", while being usable alongside the other standards and specifications. IS-07 was developed by a group of our Incubator colleagues, and shows how we may collaborate in the future.
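To illustrate what "web friendly" event data might look like, the sketch below builds a small JSON message for a camera tally light. The field names are loosely modelled on our reading of IS-07 and should be treated as illustrative, not as the normative schema. The function name, source identifier and timestamp are hypothetical.

```python
import json


def make_tally_event(source_id, tally_on, seconds, nanoseconds=0):
    """Build an illustrative boolean state message for a tally source."""
    return {
        "identity": {"source_id": source_id},
        "event_type": "boolean",
        # Timestamp written as "<seconds>:<nanoseconds>"; value passed in by the caller.
        "timing": {"creation_timestamp": f"{seconds}:{nanoseconds}"},
        "payload": {"value": bool(tally_on)},
        "message_type": "state",
    }


# Placeholder source identifier and timestamp, for illustration only.
event = make_tally_event("00000000-0000-0000-0000-0000000000aa", True, 1545000000)
encoded = json.dumps(event)
print(encoded)
```

Because the message is plain JSON, the same structure can be carried over common web transports and handled by browser-based control panels as easily as by dedicated hardware.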

Making things secure

2018 was the year in which the industry started talking about cyber security for IP-based facilities. EBU and AMWA have worked on requirements and proposed some solutions. Generally this means "follow best practice in the wider IT community", but there are some specific problems that productions can face when on location, without good access to the Internet. Of particular note is work we are doing on how to secure NMOS APIs.

We'll be holding an AMWA workshop early in 2019 to test implementations of NMOS security, IS-07, grouping and audio channel mapping.

In practice

In 2019 BBC Cymru Wales will move into its new broadcasting centre in Cardiff. This is the first significant BBC building that has an IP core, built on some of the technologies we've presented. R&D is working with the technical teams commissioning the networks and equipment.

Solid foundations

One of the complaints we hear from those building facilities such as Cardiff is that there are so many possibilities for doing things wrong! As with any new technology there are many ways of doing the same thing.

It takes time for manufacturers and users to come together on a common set of technologies and specifications. That also includes "options" within the standards. For example, it is sensible to require all receivers to support both "wide" and "narrow" streams (as specified in ST 2110-21) to interoperate with the widest range of hardware and software senders.
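As a sketch of how a receiver might check whether it can accept a stream, the code below reads the sender type from the stream's SDP description and compares it with the traffic shapes the receiver supports. It assumes the sender type is signalled in a `TP` parameter on the `a=fmtp` line with the values `2110TPN`, `2110TPNL` and `2110TPW`, which is our understanding of ST 2110-21. The example line and function names are made up for illustration.

```python
# Assumed mapping from signalled sender type to traffic shape.
TP_TO_SHAPE = {"2110TPN": "narrow", "2110TPNL": "narrow", "2110TPW": "wide"}


def parse_fmtp(line):
    """Split an 'a=fmtp:<pt> key=value; ...' line into a dict of parameters."""
    _, _, params = line.partition(" ")
    result = {}
    for item in params.split(";"):
        item = item.strip()
        if not item:
            continue
        key, sep, value = item.partition("=")
        # Parameters without '=' (such as 'interlace') are stored as flags.
        result[key] = value if sep else True
    return result


def sender_shape(fmtp_line):
    """Return 'narrow', 'wide', or None if the type is missing or unknown."""
    return TP_TO_SHAPE.get(parse_fmtp(fmtp_line).get("TP"))


def can_receive(receiver_shapes, fmtp_line):
    """True if the receiver supports the shape the sender says it uses."""
    return sender_shape(fmtp_line) in receiver_shapes


# Made-up example of a video stream description from a software sender.
EXAMPLE_FMTP = (
    "a=fmtp:96 sampling=YCbCr-4:2:2; width=1920; height=1080; "
    "exactframerate=25; depth=10; colorimetry=BT709; TP=2110TPW; interlace"
)

print(sender_shape(EXAMPLE_FMTP))
print(can_receive({"narrow"}, EXAMPLE_FMTP))
print(can_receive({"narrow", "wide"}, EXAMPLE_FMTP))
```

A receiver that handles only "narrow" streams would have to turn this sender away, which is why requiring support for both shapes gives the widest interoperability.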

Another example arises in audio equipment. Many audio manufacturers use AES67, a specification from the Audio Engineering Society. This is similar to ST 2110-30... but there are differences and options. To help avoid incompatibility, recommendations on how AES67 and ST 2110 can be used together are important.

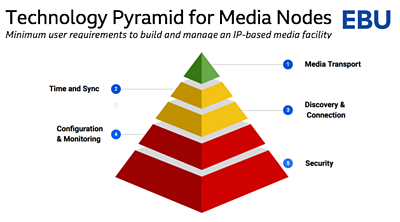

Addressing all this, the EBU has created the "", which provides guidance to what users require. The name refers to the following picture, and it comes with plenty of supporting detail:

The colours in the pyramid refer to the availability of these technologies in current products. These recommendations propose how the green and yellow layers can be achieved using SMPTE standards and NMOS. But the red parts show that the industry's journey still has some way to go. We will of course be involved in helping with this.

More info

See our page on IP Production Facilities for information about related projects, and for contacts.

BBC R&D - IP Production Facilities

BBC R&D - Industry Workshop on Professional Networked Media

BBC R&D - High Speed Networking: Open Sourcing our Kernel Bypass Work

BBC R&D - Beyond Streams and Files - Storing Frames in the Cloud

BBC R&D - IP Studio: Lightweight Live

BBC R&D - IP Studio: 2017 in Review - 2016 in Review

This project is part of the Automated Production and Media Management section