We recently released new conformance testing tools for VC-2. VC-2 is a video codec, originally developed by BBC Research & Development, which broadcasters can use to compress Ultra-HD content to fit through existing HD broadcast links without any perceptible loss of quality or added delay.

In this blog post, we'll describe how our new conformance testing tools can test VC-2 codec implementations more thoroughly. We'll also discuss the unique way in which our tools have been built to ensure that they themselves follow the VC-2 specifications as closely as possible. Finally, we'll share some of the bugs that our tools have already caught in real VC-2 implementations and even in the VC-2 standard itself!

Software tools for checking the conformance of SMPTE ST 2042-1 (VC-2) professional video codec implementations. [Github]

VC-2's design is patent and royalty-free and standardised in the catchily named 'SMPTE ST 2042-1', which numerous manufacturers have used to build VC-2 into their equipment. To ensure all of these VC-2 implementations work together, it is essential that they follow the VC-2 standard exactly. To help manufacturers get things right, the original specification was accompanied by a set of test files and procedures for testing an implementation's conformance. Unfortunately, due to VC-2's flexibility, these tests were far from comprehensive - a limitation we set out to fix by developing a new suite of VC-2 conformance testing tools and procedures.

Conformance tests made to measure

VC-2 supports an extremely wide range of applications ranging from low-latency compression within broadcast systems to long-term archival media storage. This flexibility means it is impractical to generate a comprehensive set of tests. Rather than just focusing on a few of the more common VC-2 configurations (as the previous conformance testing procedures did), we decided to build a tool to generate bespoke tests on-demand. Given a description of the basic features supported by a VC-2 implementation, our software produces a suite of test files that are 'made-to-measure'.

As well as fully supporting all possible VC-2 configurations, generating test files to order means we can exploit knowledge of a codec's exact specifications to probe the more obscure corners of VC-2's design. For instance, we can generate special test patterns tailored to a particular codec. The accompanying image features three of these test patterns, each aimed at a slightly different VC-2 configuration. It is clear that a one-size-fits-all approach to this kind of test is not possible.

Being pedantic

So far, we've talked about generating test inputs for codecs, but we also need to consider how we check that the corresponding output they produce is correct.

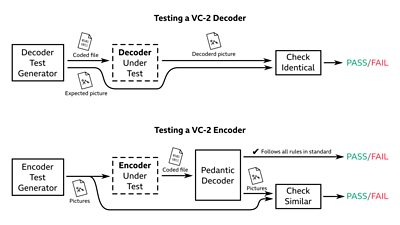

'Codec' actually refers to two separate things: encoders and decoders (hence the term). An encoder is the system that takes in uncompressed video and spits out a compressed (i.e. 'coded') version. A decoder carries out the reverse process of decompressing a coded data file into video again. Encoders and decoders are each tested differently, as we describe below.

The VC-2 specification strictly defines what a VC-2 decoder must do, meaning that there is only ever one correct way to decode a video. Every decoder test file our tools generate is accompanied by the expected decoded output. Testing a decoder is then as simple as checking that its output matches what our tools expected.
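Because the expected output is exact, the comparison can be a plain byte-for-byte check. The following is a minimal sketch of that idea, not the comparison code our tools ship: the function name, the directory layout and the `.raw` file extension are all assumptions made for illustration.

```python
from pathlib import Path


def compare_decoded_pictures(expected_dir, actual_dir):
    """Return the names of pictures that are missing or not bit-identical.

    Assumes (hypothetically) one raw picture file per decoded picture,
    with matching file names in both directories.
    """
    failures = []
    for expected in sorted(Path(expected_dir).glob("*.raw")):
        actual = Path(actual_dir) / expected.name
        # A decoder passes only if every picture matches exactly.
        if not actual.is_file() or actual.read_bytes() != expected.read_bytes():
            failures.append(expected.name)
    return failures
```

An empty list means every expected picture was reproduced exactly; anything else names the pictures to investigate.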

Testing a VC-2 encoder, however, is less simple as the VC-2 specification gives these a greater degree of freedom. As a consequence, there is no single 'correct' output for an encoder. Instead, all we can do is check that the encoded picture is sufficiently similar to the original and that the coded file follows all of the technical rules laid out in the VC-2 standard.

To check for similarity, we follow the same approach as previous VC-2 conformance tests: a manual visual inspection and an automated statistical comparison confirm that the picture quality is adequate.
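To give a flavour of what an automated statistical comparison can look like, here is a small sketch that computes the peak signal-to-noise ratio (PSNR) between an original picture and its encoded-then-decoded counterpart. PSNR is used here purely as an assumed, illustrative metric; this post does not specify which statistic the conformance procedure relies on, and the function below is not taken from our tools.

```python
import math


def psnr(original, decoded, bit_depth=8):
    """Peak signal-to-noise ratio, in dB, between two equal-length sample lists."""
    if len(original) != len(decoded) or not original:
        raise ValueError("pictures must be non-empty and the same size")
    # Mean squared error over all samples.
    mse = sum((a - b) ** 2 for a, b in zip(original, decoded)) / len(original)
    if mse == 0:
        return math.inf  # Pictures are identical.
    peak = (1 << bit_depth) - 1
    return 10 * math.log10(peak ** 2 / mse)
```

A higher figure means the decoded picture is closer to the original; any pass/fail threshold would be a matter for the test procedure itself.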

The previous conformance testing procedures used the VC-2 reference decoder to check that an encoder had followed all of the coding rules. This 'known good' (though inefficient) VC-2 decoder can correctly decode any valid file. Unfortunately, it is not designed to tolerate invalid inputs gracefully. Because of this, mistakes made by an encoder can result in opaque error messages from the reference decoder, or worse, no errors at all!

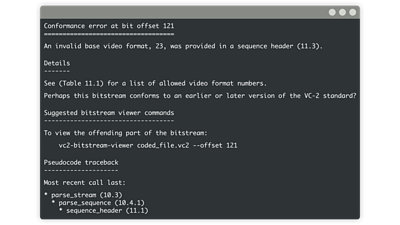

Instead of relying on the reference VC-2 decoder, we built a specialised decoder that pedantically checks that every rule in the standard is followed. When it finds a mistake, our decoder explains what rule was broken and where to look in the VC-2 standard for details.

Testing the testers

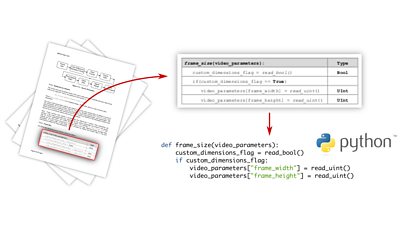

One of our top priorities was ensuring our tools remained consistent with the VC-2 specification since mistakes could have a knock-on effect on real VC-2 implementations. Though perfection is impossible (we're only human!), we were able to completely eliminate many kinds of mistake by constraining the way we built our tools. Perhaps the most significant design decision was to build large parts of the software directly using code snippets from the VC-2 standard itself.

The VC-2 standard, like many technical standards, uses snippets of 'pseudocode' (a made-up, simplified programming language) to describe many of the rules it sets out. In fact, it is possible to build a complete VC-2 decoder just by combining these snippets together and translating them into a real programming language. Furthermore, the pseudocode language used in the VC-2 standard is extremely similar to the well-known Python programming language, making it possible to automatically translate from one to the other.

We use a Python translation of the pseudocode in the VC-2 specification as the basis of many key parts of our software. Aside from saving us from reinventing the wheel, this also makes those parts of our tools correct by definition!

Our specialised pedantic VC-2 decoder, for example, consists of the decoder from the specification with extra rule checks added wherever they're required.
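To illustrate how closely Python can mirror this kind of pseudocode, here is a sketch of a routine that reads a variable-length unsigned integer (an interleaved exp-Golomb code) from a bit stream. It is written in the style of the standard's pseudocode rather than quoted from it, and the `BitReader` helper is an assumption added only to make the example runnable.

```python
class BitReader:
    """Illustrative helper: reads bits from a bytes object, MSB first."""

    def __init__(self, data):
        self.data = data
        self.pos = 0  # Index of the next bit to read.

    def read_bit(self):
        byte = self.data[self.pos // 8]
        bit = (byte >> (7 - self.pos % 8)) & 1
        self.pos += 1
        return bit


def read_uint(reader):
    """Decode one interleaved exp-Golomb coded unsigned integer."""
    value = 1
    # A 0 bit means 'another data bit follows'; a 1 bit ends the number.
    while reader.read_bit() == 0:
        value <<= 1
        if reader.read_bit() == 1:
            value += 1
    value -= 1
    return value
```

Apart from the explicit `reader` argument, the body of `read_uint` reads almost exactly like pseudocode, which is what makes automatic translation practical.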

We also built a specialised VC-2 encoder which is used internally by our tools to generate test files for decoders. Surprisingly, we were also able to directly implement a large portion of this encoder using the decoder code from the VC-2 specification. Unfortunately, the programming trick that makes this possible would fill a blog post of its own, but if you're interested, you can read about it in our tools' documentation.

To ensure that the code in our tools and the VC-2 specification remain in sync, we also developed a system that checks for inconsistencies between them. This verification step is performed automatically whenever we make a change to our code and caught numerous slip-ups during development. You can read more about the verification process in our documentation.
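One way such a consistency check could work is to compare the syntax trees of two versions of a function, so that cosmetic differences are ignored but any change in logic is flagged. The sketch below assumes that approach and tolerates only comments and docstrings; it is an illustration using Python's standard `ast` module, not a description of how our verification system is actually implemented.

```python
import ast


def normalised(source):
    """Parse source and return a dump of its syntax tree without docstrings."""
    tree = ast.parse(source)
    for node in ast.walk(tree):
        if isinstance(node, (ast.Module, ast.FunctionDef)):
            body = node.body
            has_docstring = (
                body
                and isinstance(body[0], ast.Expr)
                and isinstance(body[0].value, ast.Constant)
                and isinstance(body[0].value.value, str)
            )
            if has_docstring:
                # Drop the docstring; keep the body non-empty.
                node.body = body[1:] or [ast.Pass()]
    # Comments never reach the tree, and ast.dump omits line numbers by default.
    return ast.dump(tree)


def matches_reference(our_source, reference_source):
    """True if the two snippets differ only in comments and docstrings."""
    return normalised(our_source) == normalised(reference_source)
```

Running a check like this on every code change turns an accidental edit to specification-derived code into an immediate, visible failure.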

Of course, in addition to the design choices we made, we also followed standard software engineering practices, such as using automated testing to check that our tools behaved as expected. (In fact, these routines outweigh the tools themselves in terms of the volume of code.)

Letting our tools loose

We decided to use our tools to test two open-source VC-2 implementations developed by BBC R&D: the VC-2 reference implementation and the optimised vc2hqencode/vc2hqdecode implementation. To our surprise, we found minor issues in both implementations.

Many of the bugs we found related to missing support for lesser-used features of the VC-2 specification, such as blocks that omit their size. A coded VC-2 data stream consists of a series of blocks that usually (but not always) begin by declaring their size. One of the tests generated by our tools, which deliberately leaves out the block size, caused the VC-2 reference implementation to crash with an obscure error message.

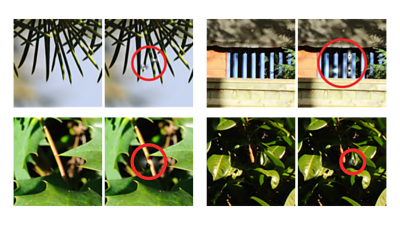

Other bugs were more subtle, such as one found in the high-performance vc2hqdecode implementation. This decoder internally uses 16-bit integers for most of its arithmetic. Roughly speaking, this means it assumes that the arithmetic necessary to decode a VC-2 stream will never need more than about five decimal digits. Unfortunately, this assumption does not always hold, resulting in picture corruption. This type of bug can be extremely difficult to catch because it only affects a minuscule proportion of pictures. When corruption does occur, however, the resulting artefact can be fairly obvious, as illustrated in the accompanying image.

Our tests were able to detect this bug using some of the precisely targeted test patterns generated for this codec which we discussed earlier.
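The underlying failure mode is easy to reproduce in isolation. The sketch below emulates signed 16-bit arithmetic in Python to show how a sum that exceeds the 16-bit range silently wraps around to a large negative number. The helper names and the sample values are made up for illustration and are not taken from vc2hqdecode.

```python
def wrap_int16(value):
    """Interpret an integer as a signed 16-bit two's complement value."""
    return ((value + 0x8000) & 0xFFFF) - 0x8000


def add_int16(a, b):
    """Add two numbers the way 16-bit integer hardware would."""
    return wrap_int16(a + b)


# Both inputs fit comfortably in 16 bits, but their sum (35000) does not.
print(add_int16(30000, 5000))  # prints -30536
```

A bright sample that should have become slightly brighter instead becomes very dark, which is why the resulting artefacts stand out once they occur.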

Finally, as well as finding bugs in VC-2 implementations, we also found some errors in the VC-2 specification itself. As well as a handful of simple typos, we also caught several minor logic bugs. These discoveries were an unexpected benefit of actually running the code in the standard, something nobody else had tried before.

As a result of our findings, we have since fixed many of these bugs. In particular, all of the bugs we found in the have now been fixed as part of a broader project to significantly improve its usability. We have also been working with SMPTE to fix the mistakes we found in the specification.

Summary

We’ve made it easier for manufacturers to test their implementations of the VC-2 video codec. Our new, more thorough testing tools and procedures have already been used to uncover bugs in real VC-2 implementations, which we have since been able to correct. These tools will enable manufacturers to test their VC-2 implementations better and give their customers, such as the BBC, greater confidence in their interoperability and performance.

Our new VC-2 conformance tools are open source and published on GitHub, along with a comprehensive user's manual describing the testing procedures.