At BBC Research & Development, we have shown how discrete media objects can be used to personalise content and adapt it to our audiences. Projects such as Click 1000 provide interactive and personalised experiences. The challenge is how to bring these innovative experiences into British homes without asking audiences to invest further in media hardware. One way, which we talked about when we announced our project, could be to use computers in data centres (the cloud) and stream experiences as video.

The past few weeks have proven to be a pivotal point for these low-latency streaming technologies. Microsoft has run public previews of its game streaming platform on Android smartphones and tablets. Google has also made headlines by launching a similar remote streaming technology that will work on a subset of devices with a Chrome web browser. Is this the moment when low-latency streaming technology becomes the next big thing?

Low-powered devices such as TVs and media streaming sticks would struggle to do the things a games console can do. By moving the hard work to the cloud and sending only video, companies are betting that they can grow the market for these experiences by letting people use the devices they already have. If the technology proves viable, what could it offer BBC audiences?

As we look at technology trends, we believe low-latency streaming has the potential to address some of the challenges of delivering complex experiences to a range of devices.

The Render Engine Broadcasting (REB) team within BBC R&D have been experimenting with low-latency streaming technology to see how it can deliver Object-Based Media (OBM) experiences at scale. We know that OBM experiences increase user immersion and engagement, and users have an appetite for them. Would this appetite grow further if they were available on any device, anywhere?

To find out, we have been experimenting. We've offloaded computationally intensive tasks, such as the composition and rendering of OBM scenes, to a powerful graphics card on a cloud server and streamed the results as video to user devices. By making use of high-speed internet connections and video streaming technologies, it is possible to stream any experience to very low-end devices, and users can interact with well-designed experiences at an acceptable latency. We are calling this technology Remote Experience Streaming (RXS for short).
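To see why rendered frames are sent as compressed video rather than as raw pixels, it helps to work out the uncompressed bitrate. The sketch below is a back-of-the-envelope calculation, assuming 8-bit colour and using the Full HD, 60 frames per second configuration described later in this post as an example; the function name is ours, for illustration only.

```python
def raw_bitrate_bps(width: int, height: int, fps: int, bits_per_pixel: int = 24) -> int:
    """Bits per second needed to send every frame uncompressed.

    Assumes no compression and no protocol overhead. 24 bits per pixel
    corresponds to 8-bit RGB.
    """
    bits_per_frame = width * height * bits_per_pixel
    return bits_per_frame * fps


if __name__ == "__main__":
    rgb = raw_bitrate_bps(1920, 1080, 60)
    # 8-bit YUV 4:2:0 (full-resolution luma, quarter-resolution chroma)
    # averages 12 bits per pixel and is a common input format for video encoders.
    yuv420 = raw_bitrate_bps(1920, 1080, 60, bits_per_pixel=12)
    print(f"RGB 8-bit: {rgb / 1e9:.2f} Gbit/s")
    print(f"YUV 4:2:0: {yuv420 / 1e9:.2f} Gbit/s")
```

Running it prints roughly 2.99 Gbit/s for RGB and 1.49 Gbit/s for YUV 4:2:0, far more than a typical home broadband connection carries, which is why the server has to compress the stream with a video encoder before sending it.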

We started looking into low-latency streaming in 2018, when we created an early prototype of RXS for the "Smart Tourism" project. This trial recreated the ancient Roman Baths in a games engine and streamed the result to mobile phones over a 5G network in the city of Bath.

The approach we have taken to developing these capabilities is centred on the idea of universal access: a remote experience can be streamed to any device, whatever its computational ability. Our target platforms are all the major web browsers as well as native platforms, and we expect our demonstrator to look and behave in the same way on all of them. For that reason, we have adopted a "write once, run anywhere" approach: the same code is used to build executables for each of our target platforms.
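One common way to keep a single codebase portable is to keep the player logic platform-neutral and push anything platform-specific (drawing frames, reading input) behind a small interface, so that only a thin layer differs per target. The post does not describe how the REB player is structured internally, so the sketch below is illustrative only: `PlatformBackend`, `Player`, `BrowserBackend` and `DesktopBackend` are hypothetical names.

```python
from abc import ABC, abstractmethod


class PlatformBackend(ABC):
    """Hypothetical interface for the platform-specific parts of a player."""

    name: str

    @abstractmethod
    def present(self, frame: bytes) -> str:
        """Display one decoded frame; returns a description for demo purposes."""


class BrowserBackend(PlatformBackend):
    name = "browser"

    def present(self, frame: bytes) -> str:
        # A real browser build might draw to a <canvas> or <video> element here.
        return f"{self.name}: drew {len(frame)} bytes"


class DesktopBackend(PlatformBackend):
    name = "desktop"

    def present(self, frame: bytes) -> str:
        # A real native build might hand the frame to a windowing or GPU API here.
        return f"{self.name}: drew {len(frame)} bytes"


class Player:
    """Platform-neutral logic, written once and shared by every target."""

    def __init__(self, backend: PlatformBackend) -> None:
        self.backend = backend
        self.frames_shown = 0

    def on_frame(self, frame: bytes) -> str:
        self.frames_shown += 1
        return self.backend.present(frame)


if __name__ == "__main__":
    for backend in (BrowserBackend(), DesktopBackend()):
        player = Player(backend)
        print(player.on_frame(b"\x00" * 16))
```

The `Player` class never checks which platform it is on; swapping the backend is the only per-target change, which is what lets the shared core be built once for each platform.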

We have therefore made it possible for devices such as a laptop, an Android tablet or a Raspberry Pi Zero to stream and display an experience rendered remotely.

We've done substantial work in the past year to build our RXS system into an extensible, general-purpose player. We have concentrated on keeping latency as low as possible, aiming for a smooth user experience on any device, regardless of network conditions. At Full HD resolution (1920x1080) and 60 frames per second, our Mac and Ubuntu devices show a delay of up to 40 ms, which is impressively low. Although our lower-end devices, such as the little Raspberry Pi Zero, show a bit more latency, we are still able to provide quality experiences on all of our target devices.
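To put the 40 ms figure in context, it can be expressed in frames: at 60 frames per second a new frame is shown about every 16.7 ms, so a 40 ms delay corresponds to roughly 2.4 frame intervals. A minimal sketch of that arithmetic (the function names are illustrative):

```python
def frame_interval_ms(fps: float) -> float:
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


def latency_in_frames(latency_ms: float, fps: float) -> float:
    """Express a delay as a number of frame intervals at the given frame rate."""
    return latency_ms * fps / 1000.0


if __name__ == "__main__":
    fps = 60
    print(f"Frame interval at {fps} fps: {frame_interval_ms(fps):.2f} ms")
    print(f"A 40 ms delay is {latency_in_frames(40, fps):.1f} frames")
```

This prints a frame interval of 16.67 ms and a delay of 2.4 frames.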

Looking at trends in low-latency game streaming has helped the REB team understand how this technology might unlock use cases beyond the traditional games market. Our research into RXS technology lets us make informed decisions about what the BBC could use this technology for, whether the BBC should invest in building it or buy it, how sustainable it is, and what impact it has on networks and device lifetimes.

The Full Picture

We believe that in the future, content platforms will have the ability to provide experiences of any format (video, interactive, RXS, and so on).

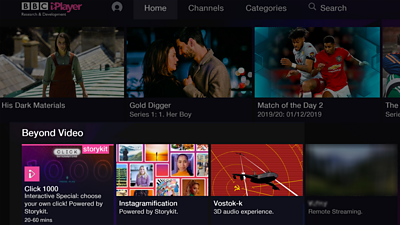

Imagine an iPlayer that lets you do more than watch His Dark Materials, RuPaul’s Drag Race UK, or any of your favourite BBC shows. What if you could direct how the plot unfolds? Or launch a high-end graphics game remotely from your device and interact with it as if it were running locally? Or choose to play a stream from a different camera angle on a separate device?


We have attempted to build such an iPlayer. Our demonstrator can run Click 1000, the interactive episode of the BBC's technology programme Click. You can navigate through the branching-narrative episode with a mouse and keyboard, or with a gamepad or remote control when watching on a TV. In our prototype, you can also launch and play an RXS game in real time and navigate through the remotely rendered scene on your local device.
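Supporting a mouse and keyboard, a gamepad and a TV remote in one player usually means translating each device's raw events into a small shared set of actions before they drive the story (or, for an RXS game, before they are forwarded to the server). The sketch below shows that idea for choosing between branches of a narrative; the key names, the `Action` set, `INPUT_MAP` and the `ChoiceMenu` class are all hypothetical and not taken from the demonstrator.

```python
from __future__ import annotations

from enum import Enum, auto


class Action(Enum):
    PREVIOUS = auto()
    NEXT = auto()
    SELECT = auto()


# Hypothetical mapping from (device, raw input) to a shared action.
INPUT_MAP: dict[tuple[str, str], Action] = {
    ("keyboard", "ArrowLeft"): Action.PREVIOUS,
    ("keyboard", "ArrowRight"): Action.NEXT,
    ("keyboard", "Enter"): Action.SELECT,
    ("gamepad", "dpad_left"): Action.PREVIOUS,
    ("gamepad", "dpad_right"): Action.NEXT,
    ("gamepad", "button_a"): Action.SELECT,
    ("remote", "left"): Action.PREVIOUS,
    ("remote", "right"): Action.NEXT,
    ("remote", "ok"): Action.SELECT,
}


def to_action(device: str, key: str) -> Action | None:
    """Translate a raw input event; unmapped inputs return None and are ignored."""
    return INPUT_MAP.get((device, key))


class ChoiceMenu:
    """Highlights one of several branch choices and reports the one selected."""

    def __init__(self, choices: list[str]) -> None:
        self.choices = choices
        self.index = 0

    def handle(self, action: Action | None) -> str | None:
        # Moving past either end wraps around to the other end.
        if action is Action.PREVIOUS:
            self.index = (self.index - 1) % len(self.choices)
        elif action is Action.NEXT:
            self.index = (self.index + 1) % len(self.choices)
        elif action is Action.SELECT:
            return self.choices[self.index]
        return None


if __name__ == "__main__":
    menu = ChoiceMenu(["Option A", "Option B"])
    chosen = None
    for device, key in [("remote", "right"), ("gamepad", "button_a")]:
        chosen = menu.handle(to_action(device, key))
    print(chosen)
```

Here a remote-control "right" press followed by a gamepad "A" press selects "Option B": the menu logic never needs to know which device produced each event.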

Our audience's needs are forever changing, and BBC R&D views object-based media as a key component in creating exciting new experiences that respond to those needs. We see internet delivery and streaming technologies as ways to address this challenge, and we've demonstrated this through our RXS technology and multi-format iPlayer.


- BBC R&D - Object-Based Media at Scale with Render Engine Broadcasting

- BBC R&D - Where Next For Interactive Stories?

- BBC R&D - Storytelling of the Future

- BBC R&D - StoryFormer: Building the Next Generation of Storytelling

- BBC News - Click 1,000: How the pick-your-own-path episode was made

- BBC R&D - Object-Based Media Toolkit

- BBC R&D - How we Made the Make-Along


Future Experience Technologies section

This project is part of the Future Experience Technologies section