I've recently had the privilege of attending the World Cup in Russia and Wimbledon to help with the BBC's trial of delivering Ultra High Definition to the home. Some of my colleagues have recently been interviewed about the technology in use. This blog post takes a deeper look at the UHD HDR production.

Over the past couple of years we've been busy running and reporting on tests to develop production guidelines, covering everything from what the reference monitor should be to the signal levels at which various common objects should be placed in the video. We've tested our theories on a wide range of BBC productions, from Planet Earth II to the FA Cup Final, and with external partners at a cycling event in Düsseldorf.

Ultra High Definition (branded as Ultra HD, UHD and also sometimes incorrectly called 4k) is a toolbox of enhancements from which broadcasters can mix and match:

- A larger image raster, giving more fine detail (UHD1 = 3840×2160, UHD2 = 7680×4320),

- Higher frame rates (up to 120 fps), giving better motion portrayal (HFR),

- Wider colour gamut, allowing more saturated, natural looking colours (WCG), and

- High Dynamic Range, allowing the display of a wider brightness range with more natural highlights (HDR).
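To give a feel for how the raster and frame-rate options in this toolbox multiply up, here is a back-of-the-envelope calculation of uncompressed active-picture data rates. It is a sketch rather than anything taken from our trial equipment: the `VideoFormat` helper is hypothetical, 10-bit 4:2:2 sampling and the 100 fps HFR example are assumptions, and blanking and ancillary data are ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VideoFormat:
    """Hypothetical description of a progressive video format."""
    name: str
    width: int
    height: int
    frame_rate: float               # frames per second
    bit_depth: int = 10             # assumed 10-bit samples
    samples_per_pixel: float = 2.0  # assumed 4:2:2: 1 luma + 0.5 Cb + 0.5 Cr per pixel

    def bits_per_second(self) -> float:
        """Uncompressed active-picture rate (no blanking or ancillary data)."""
        return (self.width * self.height * self.frame_rate
                * self.bit_depth * self.samples_per_pixel)


FORMATS = [
    VideoFormat("HD 1920x1080p50", 1920, 1080, 50),
    VideoFormat("UHD1 3840x2160p50", 3840, 2160, 50),
    VideoFormat("UHD1 3840x2160p100", 3840, 2160, 100),  # an HFR example
    VideoFormat("UHD2 7680x4320p50", 7680, 4320, 50),
]

if __name__ == "__main__":
    hd_rate = FORMATS[0].bits_per_second()
    for fmt in FORMATS:
        rate = fmt.bits_per_second()
        print(f"{fmt.name:20s} {rate / 1e9:6.2f} Gbit/s ({rate / hd_rate:.0f}x HD)")
```

Under these assumptions UHD1 at 50 fps works out at about 8.3 Gbit/s, four times HD, and raster and frame rate scale the figure linearly. That is part of the reason contribution links use compression, such as the SMPTE VC-2 links used at the World Cup.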

FIFA World Cup

The trial used a feed produced at 3840x2160p50 with HDR by Host Broadcast Services (HBS). We became involved in late February, when the HDR feed was announced, and assessed what we could do while remembering that the HD programme (from which SD is derived) is the primary delivery.

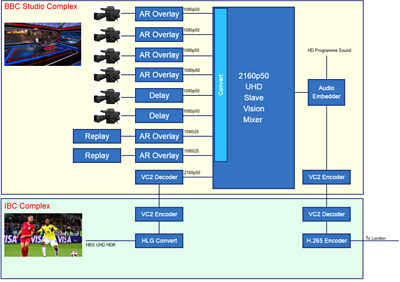

The BBC's Moscow operation was split across two locations: the studio complex was near Red Square, and the FIFA International Broadcast Centre (IBC) was at a convention centre in the Moscow suburbs. At the IBC we received the UHD HDR feed from HBS. As reported in a previous blog post by BBC R&D's Phil Layton, the production workflow for the World Cup was not native HLG, as Host Broadcast Services had defined their own HDR workflow. A mixture of cameras was used: some were UHD HDR, some were HD HDR and some were conventional HD. At the IBC, I installed equipment to convert the HBS video format to ITU-R BT.2100 HLG using a transparent transform designed by BBC R&D.

The same IBC equipment was used to generate a fall-back stereo audio feed, for use if communication with the OB Scanner at the studio (the mobile production vehicle containing the production gallery, vision operations area, sound operations area, etc.) was lost, for example through a major power outage.

The studio complex was run from a single OB Scanner. The additional augmented reality (AR) devices in the studio, used to produce graphical overlays and virtual parts of the set such as the big window through which the football match is shown, and the replay devices had already been commissioned when the HDR feed was announced, and were limited to HD processing rates. We therefore decided to upconvert the HD video to UHD HDR, effectively inverting the camera "knee" used to control bright highlights (such as the window behind the presenters) and mapping into the larger colour space.
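Inverting a knee is easiest to picture with a piecewise-linear model. The sketch below is illustrative only: real camera knees are manufacturer-specific and often adjustable, and the `KNEE_POINT` and `KNEE_SLOPE` values and the function names are hypothetical, not the settings used at the World Cup.

```python
# Hypothetical knee parameters (illustrative only).
KNEE_POINT = 0.85  # signal level above which the camera compresses highlights
KNEE_SLOPE = 0.25  # gradient of the compressed (above-knee) region


def apply_knee(v: float) -> float:
    """Forward knee as a camera might apply it: unity gain below the knee point."""
    if v <= KNEE_POINT:
        return v
    return KNEE_POINT + (v - KNEE_POINT) * KNEE_SLOPE


def invert_knee(v: float) -> float:
    """Undo the knee: re-expand the compressed region by 1 / KNEE_SLOPE.

    Results above 1.0 are intended; they are highlight detail that an HDR
    signal has headroom to carry.
    """
    if v <= KNEE_POINT:
        return v
    return KNEE_POINT + (v - KNEE_POINT) / KNEE_SLOPE


if __name__ == "__main__":
    for pre_knee in (0.5, 0.9, 1.45):
        kneed = apply_knee(pre_knee)
        print(pre_knee, "->", kneed, "->", invert_knee(kneed))
```

Inverting the knee only re-expands what the knee compressed; highlights that the camera clipped outright cannot be recovered this way.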

A second, slave vision mixer was used to mirror the cuts, wipes and overlays of the HD programme vision mixer. The cameras and AR processing were converted to ITU-R BT.2100 HLG video at the mixer input using a scene light conversion. "Scene light" conversions are important when mixing between cameras using different standards (such as HD and HLG). The graphical overlays, on the other hand, were converted using a "display light" conversion so that the branding colours match those seen on HD. For further discussion of HDR conversions, please see section 10.
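The difference between the two kinds of conversion is easiest to see side by side. This is a minimal sketch, not the BBC R&D look-up tables: it assumes a BT.709 camera OETF for the scene light path, a BT.1886 display with gamma 2.4 and zero black level for the display light path, a 1000 cd/m² HLG reference display, and that SDR 100% should land on the ITU-R BT.2408 HLG reference white (75% signal, about 203 cd/m²). The constants come from ITU-R BT.2100 and BT.2087; the function names are hypothetical.

```python
import math

# ITU-R BT.2100 HLG OETF constants
HLG_A = 0.17883277
HLG_B = 1 - 4 * HLG_A                      # 0.28466892
HLG_C = 0.5 - HLG_A * math.log(4 * HLG_A)  # 0.55991073

# Linear-light BT.709 -> BT.2020 primaries (ITU-R BT.2087)
M_709_TO_2020 = (
    (0.6274, 0.3293, 0.0433),
    (0.0691, 0.9195, 0.0114),
    (0.0164, 0.0880, 0.8956),
)

PEAK_NITS = 1000.0      # assumed HLG reference display peak
SYSTEM_GAMMA = 1.2      # HLG system gamma at 1000 cd/m^2
SDR_WHITE_NITS = 203.0  # assumed: SDR 100% displayed at HDR reference white


def hlg_oetf(e: float) -> float:
    """Normalised scene light E (0..1) -> HLG signal E' (0..1)."""
    e = max(e, 0.0)
    if e <= 1 / 12:
        return math.sqrt(3 * e)
    return HLG_A * math.log(12 * e - HLG_B) + HLG_C


def hlg_inverse_oetf(v: float) -> float:
    """HLG signal E' -> normalised scene light E."""
    if v <= 0.5:
        return v * v / 3
    return (math.exp((v - HLG_C) / HLG_A) + HLG_B) / 12


def bt709_inverse_oetf(v: float) -> float:
    """SDR camera signal -> relative scene light (inverse BT.709 OETF)."""
    if v < 4.5 * 0.018:
        return v / 4.5
    return ((v + 0.099) / 1.099) ** (1 / 0.45)


def to_bt2020(rgb):
    """Apply the 3x3 primaries matrix to linear-light RGB."""
    return [sum(m * c for m, c in zip(row, rgb)) for row in M_709_TO_2020]


# Assumed scaling: SDR scene light 1.0 lands on HLG 75% (reference white).
SCENE_SCALE = hlg_inverse_oetf(0.75)


def scene_light_convert(rgb709):
    """Cameras/AR: reproduce the *scene* the SDR camera was looking at."""
    linear = to_bt2020([bt709_inverse_oetf(v) for v in rgb709])
    return tuple(hlg_oetf(SCENE_SCALE * c) for c in linear)


def display_light_convert(rgb709):
    """Graphics: reproduce the *light emitted* by an SDR (BT.1886) display."""
    displayed = to_bt2020([max(v, 0.0) ** 2.4 for v in rgb709])
    f_d = [SDR_WHITE_NITS * c for c in displayed]  # cd/m^2
    y_d = 0.2627 * f_d[0] + 0.6780 * f_d[1] + 0.0593 * f_d[2]
    if y_d <= 0:
        return (0.0, 0.0, 0.0)
    # Inverse HLG OOTF (BT.2100, black level assumed zero)
    gain = (y_d / PEAK_NITS) ** ((1 - SYSTEM_GAMMA) / SYSTEM_GAMMA) / PEAK_NITS
    return tuple(hlg_oetf(gain * c) for c in f_d)


if __name__ == "__main__":
    for name, colour in (("white", (1.0, 1.0, 1.0)), ("709 red", (1.0, 0.0, 0.0))):
        print(name, scene_light_convert(colour), display_light_convert(colour))
```

With these assumptions both paths put white at about 75%, but saturated colours diverge: BT.709 red comes out brighter through the display light path than through the scene light path. That divergence is why the choice matters when branding colours must match HD.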

All conversions are exact mathematical conversions implemented in look-up tables (LUTs).
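To illustrate how a conversion can be baked into a table, here is a minimal 3D LUT sketch: sample any RGB-to-RGB conversion function on a grid, then look pixels up with trilinear interpolation. A 3D table is used because conversions that mix channels between non-linear signals cannot be split into three independent 1D curves. The 33-point grid, clamping inputs to 0..1 and the function names are assumptions; broadcast converters have their own LUT formats, sizes and interpolation schemes.

```python
from itertools import product
from typing import Callable, List, Tuple

RGB = Tuple[float, float, float]
Lut3D = List[List[List[RGB]]]


def build_3d_lut(fn: Callable[[RGB], RGB], size: int = 33) -> Lut3D:
    """Sample fn at size^3 evenly spaced R'G'B' points in the 0..1 cube.

    Indexing is lut[r][g][b]; size must be at least 2.
    """
    step = 1.0 / (size - 1)
    return [[[fn((r * step, g * step, b * step)) for b in range(size)]
             for g in range(size)]
            for r in range(size)]


def apply_3d_lut(lut: Lut3D, rgb: RGB) -> RGB:
    """Convert one pixel by trilinear interpolation between the 8 surrounding nodes."""
    size = len(lut)
    idx, frac = [], []
    for c in rgb:
        pos = min(max(c, 0.0), 1.0) * (size - 1)  # assumption: clamp to 0..1
        i = min(int(pos), size - 2)               # lower node of the cell
        idx.append(i)
        frac.append(pos - i)                      # position within the cell
    out = [0.0, 0.0, 0.0]
    for dr, dg, db in product((0, 1), repeat=3):
        weight = ((frac[0] if dr else 1.0 - frac[0])
                  * (frac[1] if dg else 1.0 - frac[1])
                  * (frac[2] if db else 1.0 - frac[2]))
        node = lut[idx[0] + dr][idx[1] + dg][idx[2] + db]
        for k in range(3):
            out[k] += weight * node[k]
    return (out[0], out[1], out[2])


if __name__ == "__main__":
    # Hypothetical stand-in for a real conversion, passed in the same way.
    def example_conversion(rgb: RGB) -> RGB:
        return (rgb[0] ** 2.4, rgb[1] ** 2.4, rgb[2] ** 2.4)

    lut = build_3d_lut(example_conversion)
    pixel = (0.5, 0.25, 0.9)
    print(apply_3d_lut(lut, pixel), example_conversion(pixel))
```

Trilinear interpolation reproduces a linear (matrix) conversion exactly, and any function exactly at the grid nodes, so a LUT like this only loses accuracy through the curvature of the conversion between nodes; a finer grid trades memory for accuracy.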

The HBS UHD HDR match feed, sent from the IBC using SMPTE VC-2 encoding, was also fed into the slave mixer. This allowed the UHD vision mixer to replicate completely the programme produced in the HD vision mixer. Finally, the HD programme audio was embedded into the UHD programme, which was sent back to the IBC on a VC-2 link and thence to London on a high-bitrate ITU-T H.265 feed.

Wimbledon

The trial used a feed produced at 3840x2160p50 with HDR by Wimbledon Broadcast Services (WBS).

WBS used a native ITU-R BT.2100 HLG video workflow for the matches taking place on Centre Court, in a similar manner to that used for the Royal Wedding. The vision supervisor for UHD was co-located with the camera racks engineers and was able to monitor and adjust all cameras live, checking both the conventional HD signal and the high dynamic range, wide colour gamut Ultra HD signal. The Ultra HD service contained a mix of Ultra HD and HD cameras, graphical overlays and full screen graphics, e.g. computer models of the ball in flight.

This service was delivered to the BBC HDR production area vision mixer. The vision mixer was also fed with an upconversion of the BBC presentation studio output. Compared to the World Cup workflow:

- The HD upconversions used slightly different hardware and were tuned by eye using a graphics processing engine, as the current firmware version of the hardware does not implement the mathematical transform.

- The graphics overlays are not provided separately, so they use the same scene light based transform as the incoming video.

The presentation studio is only in use for the UHD feed when it is exclusively discussing Centre Court. This means that the HD upconversions of the BBC studio are only used for the semi-finals and finals.

Lessons

It should be remembered that these were always designed as trials, and that throughout the trials we have been optimising various parts of the production chain.

- There were comments about the difference in brightness and colour saturation between the HDR trial service and conventional HD services. Some of this may be due to different HDR production styles between venues, and some may also be attributed to television setups at home and the natural "look" of the HLG HDR image.

- When dealing with numerous different types of camera, it is best to use "scene light" conversions, as at Wimbledon.

- We've noticed that some sports clothing appears to be both bluer and brighter than a theoretical white diffuse cloth; further work is required to understand this.
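Both the brightness comments and the clothing observation can be framed with a little HLG arithmetic. The sketch below uses the ITU-R BT.2100 HLG inverse OETF, the BT.2100 formula for system gamma as a function of display peak luminance, and the BT.2408 reference white level of 75%; the function names and the 80% reading mentioned afterwards are hypothetical. It shows that the same 75% diffuse white is displayed at different luminances on TVs with different peak brightness, and how far above diffuse white a measured signal level sits in scene light.

```python
import math

# ITU-R BT.2100 HLG constants
HLG_A = 0.17883277
HLG_B = 1 - 4 * HLG_A
HLG_C = 0.5 - HLG_A * math.log(4 * HLG_A)
REFERENCE_WHITE_SIGNAL = 0.75  # BT.2408 HLG reference (diffuse) white level


def hlg_system_gamma(peak_nits: float) -> float:
    """BT.2100 system gamma for a display of nominal peak luminance L_W.

    Specified for peaks of roughly 400 to 2000 cd/m^2.
    """
    return 1.2 + 0.42 * math.log10(peak_nits / 1000.0)


def hlg_inverse_oetf(v: float) -> float:
    """HLG signal E' -> normalised scene light E."""
    if v <= 0.5:
        return v * v / 3
    return (math.exp((v - HLG_C) / HLG_A) + HLG_B) / 12


def relative_to_reference_white(signal: float) -> float:
    """Scene light of an HLG level relative to diffuse white (1.0 = same)."""
    return hlg_inverse_oetf(signal) / hlg_inverse_oetf(REFERENCE_WHITE_SIGNAL)


def displayed_white_nits(peak_nits: float) -> float:
    """Luminance of an achromatic 75% HLG signal (HLG OOTF, zero black level)."""
    e = hlg_inverse_oetf(REFERENCE_WHITE_SIGNAL)
    return peak_nits * e ** hlg_system_gamma(peak_nits)


if __name__ == "__main__":
    for peak in (500.0, 1000.0, 2000.0):
        print(f"{peak:6.0f} cd/m^2 display: 75% shown at {displayed_white_nits(peak):.0f} cd/m^2")
    print("80% reading vs diffuse white:", relative_to_reference_white(0.80))
```

Under these formulas the 75% level is displayed at about 203 cd/m² on a 1000 cd/m² display but noticeably lower on a 500 cd/m² set, and a hypothetical 80% reading on a shirt would correspond to roughly 1.3 times the scene light of a diffuse white.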

What's next? We have done a UHD HDR HFR WCG NGA shoot (the cycling event in Düsseldorf) but we haven't done one live yet. Watch this space for further production tests...


Broadcast and Connected Systems section

Broadcast & Connected Systems primarily focuses on how BBC content reaches our viewers through broadcast and Internet delivery. This involves the whole broadcast chain from playout, through coding and distribution to consumption on the end-user's device. Our work typically covers a period from now through to 3 years out from deployment.