This week we focus on our Personalised Radio project, as the first prototype emerges, and review other active projects in the IRFS section.

The resulting model is used in conjunction with the Tag versus Episode matrix to produce the recommendations.

When we compared the recommendations against actual user viewings, performance was poor until we normalised the weighting of each genre and tag, after which we achieved 41% precision and 42% recall. The precision is lower than that of our initial recommender, which used pure collaborative filtering, but we have gained the ability to provide explanations. We believe this is a worthwhile trade-off.
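To make the evaluation concrete, here is a minimal sketch of how tag-based scoring and the precision and recall figures might be computed. It assumes the model boils down to a set of per-user tag weights, and that normalisation means scaling those weights to sum to 1; neither detail is confirmed above. The function names, tags and episode identifiers are all hypothetical.

```python
def normalise(weights):
    """Scale a user's tag weights so they sum to 1 (one possible normalisation)."""
    total = sum(weights.values())
    if total == 0:
        return dict(weights)
    return {tag: w / total for tag, w in weights.items()}


def score_episodes(user_tag_weights, tag_episode):
    """Score each episode as the sum of the user's weights for the tags it carries."""
    scores = {}
    for tag, episodes in tag_episode.items():
        weight = user_tag_weights.get(tag, 0.0)
        for episode in episodes:
            scores[episode] = scores.get(episode, 0.0) + weight
    return scores


def top_n(scores, n):
    """Return the n highest-scoring episodes, breaking ties by episode id."""
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [episode for episode, _ in ranked[:n]]


def precision_recall(recommended, consumed):
    """Precision: share of recommendations consumed. Recall: share of consumption recommended."""
    hits = len(set(recommended) & set(consumed))
    precision = hits / len(recommended) if recommended else 0.0
    recall = hits / len(consumed) if consumed else 0.0
    return precision, recall


# Hypothetical Tag versus Episode matrix, stored sparsely as tag -> episodes.
tag_episode = {
    "comedy": ["ep1", "ep2"],
    "history": ["ep2", "ep3"],
    "science": ["ep4"],
}

# Hypothetical raw tag weights for one user, then normalised.
user_weights = normalise({"comedy": 3, "history": 1})

recommended = top_n(score_episodes(user_weights, tag_episode), 2)
precision, recall = precision_recall(recommended, ["ep2", "ep3"])
print(recommended, precision, recall)
```

Because every score is a sum of named tag weights, each recommendation can be traced back to the tags that produced it, which is the property that pure collaborative filtering lacks.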

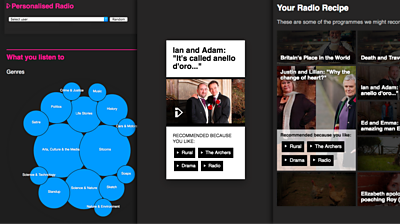

The first application of the recommender is a prototype web interface developed by Jakub, which allows users to log in using their BBC account and listen to a stream of recommended programmes. For each recommended programme, the interface exposes the reasoning behind the recommendation, e.g. which tags we think the user may be interested in. For each tag we also expose evidence in the form of a list of programmes the user has previously listened to with this tag.
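As an illustration of the explanation described above, the following sketch builds a list of tags shared between a recommended programme and the user's profile, each with supporting programmes from the listening history. The data shapes, the `explain` function and the programme titles are assumptions made for the example, not the prototype's actual code.

```python
def explain(programme_tags, user_tag_weights, listening_history, max_evidence=3):
    """Return one entry per tag shared by the programme and the user's profile.

    Each entry lists previously heard programmes carrying that tag as evidence.
    """
    shared = [t for t in programme_tags if user_tag_weights.get(t, 0) > 0]
    # Strongest interests first; ties broken alphabetically for stable output.
    shared.sort(key=lambda t: (-user_tag_weights[t], t))

    explanation = []
    for tag in shared:
        evidence = [title for title, tags in listening_history if tag in tags]
        explanation.append({"tag": tag, "evidence": evidence[:max_evidence]})
    return explanation


# Hypothetical user profile and listening history.
user_tag_weights = {"science": 0.6, "history": 0.3, "comedy": 0.1}
listening_history = [
    ("Programme A", {"history", "science"}),
    ("Programme B", {"science", "comedy"}),
    ("Programme C", {"comedy"}),
]

reasons = explain({"science", "music"}, user_tag_weights, listening_history)
print(reasons)
```

Here the recommended programme is tagged with both "science" and "music", but only "science" appears in the explanation because the user has no recorded interest in "music".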

Finally, we're prototyping a Recipe page which displays a grid of sample recommendations and allows the user to customise their "personalisation recipe".

Tellybox

The Tellybox project is our exploration of what TV could and should become in the next ten to fifteen years, and the best of our ideas are now being developed into exploratory iPlayer interfaces.

This sprint, Libby got a voice control interface working on the Tellybox prototype above, adding a Bluetooth hands-free adaptor to the Raspberry Pi. Libby and Alicia are now exploring how to make the back-end voice code (in Python) communicate with the front-end (in ) and researching the use of . So far, two of the four Tellybox prototypes have been written in React. Alicia's next challenge will be the UX design and operation of the Children's prototype.

Talking with Machines

Andrew and Joanna have started some collaborative work with the UX&D Voice team, analysing the Orator tool that IRFS built as the production tool for The Inspection Chamber. The aim is to develop some UX recommendations to inform its next design iteration. They are interviewing stakeholders across the business to ascertain requirements and ambitions - to confirm our assumptions of who will be using Orator and the varying experiences that could potentially be made with the tool. The interviews will also inform other outputs, including a competitor analysis and User Journeys.

New News

The New News team has tested four adaptive news prototypes with five female participants, gaining some valuable feedback, and is now iterating on the prototypes ahead of lab tests in Leeds at the end of February.

Speech and Vision

Nick has finally been able to track down the reason for the differences between his results and the earlier results and complete the evaluation of three different speaker identification libraries. He's selected the overall best and is now working on packaging it up so that it can be used by others, and producing a demo to show what it can do.

Ben and Matt have been wrapping up the audio feature extraction code using Python so that it can be used in the speaker identification work and elsewhere. They've been writing it so that it can be used in a streaming / online context. They're also wrapping existing tools to do voice activity detection and speaker change detection, both of which are required for speaker identification.
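To show what a streaming / online context implies for feature extraction, here is a rough sketch of a generator that accepts audio in arbitrarily sized chunks and emits a feature for each complete frame as soon as it is available. RMS energy stands in for whatever features the real code computes, and the function names and parameters are hypothetical.

```python
import math


def stream_frames(chunks, frame_len, hop):
    """Yield fixed-length, overlapping frames from an iterable of sample chunks.

    Samples are buffered across chunk boundaries, so the frames do not depend
    on how the incoming audio happens to be split up.
    """
    buffer = []
    for chunk in chunks:
        buffer.extend(chunk)
        while len(buffer) >= frame_len:
            yield buffer[:frame_len]
            del buffer[:hop]  # advance by one hop, keeping the overlap


def rms(frame):
    """Root-mean-square energy of one frame (a stand-in feature)."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def stream_rms(chunks, frame_len=4, hop=2):
    """Yield one RMS value per frame as the audio arrives."""
    for frame in stream_frames(chunks, frame_len, hop):
        yield rms(frame)


# Eight samples delivered in three uneven chunks.
incoming = [[0, 1, 2], [3, 4], [5, 6, 7]]
frames = list(stream_frames(incoming, frame_len=4, hop=2))
print(frames)
```

Because the generator only ever holds a little more than one frame of samples, downstream steps such as voice activity detection could consume features without waiting for the whole recording.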

Meanwhile, Denise has been exploring how we might use text, in conjunction with face detection, for automatic people identification. The text could potentially come from subtitles or from speech to text systems. Each name reference found in the text is used to label the collection of detected faces and train a classifier.

W3C Standards

Chris joined an early morning conference call of the , to discuss plans for developing the protocols to support the Presentation API and Remote Playback API.

Following the 's last conference call, which focused on support for media timed events in HTML5, Chris has written an initial requirements and gap analysis document, and shared this with members of the Media & Entertainment Interest Group.


Internet Research and Future Services section

The Internet Research and Future Services section is an interdisciplinary team of researchers, technologists, designers, and data scientists who carry out original research to solve problems for the BBC. Our work focuses on the intersection of audience needs and public service values, with digital media and machine learning. We develop research insights, prototypes and systems using experimental approaches and emerging technologies.