Between the 6th and 10th of August the ICoSOLE project team travelled to a field in Belgium to take part in a technical trial at the Dranouter music festival. We all arrived equipped with servers, laptops, video cameras, audio equipment and enough cable to stretch across Belgium (or that's what it seemed like!). The following few days were intense, loud and hot, but very productive and interesting. In this post we will share with you what we got up to at this event.

As part of the EU-funded ICoSOLE (Immersive Coverage of Spatially Outspread Live Events) collaborative project, the consortium is running field trials to try out our developments in real-life scenarios and obtain realistic test material.

The aim is to build an IP end-to-end system which allows the capture, mixing and spatialisation of multichannel live audio, synchronisation to accompanying video streams and delivery to audience-facing IP networks for public consumption.

Last year we ran an earlier trial in very controlled conditions in Salford.

In order to test our systems in a more realistic scenario and to better understand the potential challenges that would present themselves, the mid-project field trial was held at the Dranouter folk music festival. The festival is set in a small Belgian village not far from the French border, and our work focused on the main stage.

We captured multi-track audio from the acts on stage and turned it into a live stream which would accompany the video feeds that other partners (iMinds, VRT) were capturing. The audio isn't just a conventional stereo feed; the aim is to provide a novel immersive experience, giving the listener a 3-dimensional listening experience. This was achieved by using object-based audio, where each audio source (instruments and singers in this case) is given some metadata that describes its position in a 3D space. A renderer can then convert these audio objects into signals to drive either a set of loudspeakers or headphones.
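To make the idea of object-based audio a little more concrete, here is a minimal Python sketch. It is an illustration only and not the renderer used in the trial: the `AudioObject` class, the `stereo_gains` and `render_stereo` functions and the azimuth convention (-90 degrees is hard left, +90 degrees is hard right) are all assumptions made for this example, and the position is reduced to a single angle rather than a full 3D description. Each object carries its own mono signal plus position metadata, and a very simple renderer turns the objects into a stereo feed using constant-power panning.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class AudioObject:
    """One sound source plus positional metadata (hypothetical structure)."""
    name: str
    samples: List[float]   # mono signal for this source
    azimuth_deg: float     # assumed convention: -90 = hard left, +90 = hard right


def stereo_gains(azimuth_deg: float) -> Tuple[float, float]:
    """Constant-power pan law: map an azimuth to (left, right) gains."""
    clamped = max(-90.0, min(90.0, azimuth_deg))
    # Map [-90, +90] degrees onto a pan angle in [0, pi/2].
    theta = (clamped + 90.0) / 180.0 * (math.pi / 2)
    return math.cos(theta), math.sin(theta)


def render_stereo(objects: Sequence[AudioObject]) -> Tuple[List[float], List[float]]:
    """Mix all objects down to a stereo pair using their position metadata."""
    length = max((len(obj.samples) for obj in objects), default=0)
    left = [0.0] * length
    right = [0.0] * length
    for obj in objects:
        gain_left, gain_right = stereo_gains(obj.azimuth_deg)
        for i, sample in enumerate(obj.samples):
            left[i] += gain_left * sample
            right[i] += gain_right * sample
    return left, right


if __name__ == "__main__":
    stage = [
        AudioObject("vocals", [0.5, 0.5, 0.5], azimuth_deg=0.0),
        AudioObject("fiddle", [0.2, 0.1, 0.0], azimuth_deg=-45.0),
    ]
    print(render_stereo(stage))
```

The point of keeping the position as metadata is that the same objects could be handed to a different renderer (binaural or surround, for example) without remixing the sources.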

There was far more to this work than simply capturing some audio and rendering it. The key challenge was to test how our ingest, capture and rendering could be integrated into an IP-based end-to-end production chain that delivers content to the audience.

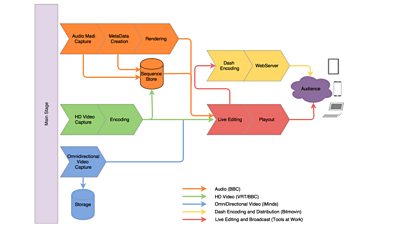

The distribution system is used to make the captured audio available in another location (such as a TV studio) and render it into streams that are suitable for consumer devices. The system used to do this is BBC R&D's IP Studio, which allows programmes to be produced and distributed in software on IP networks. To give an overview of the setup we used, the diagram below shows how the different building blocks were connected.

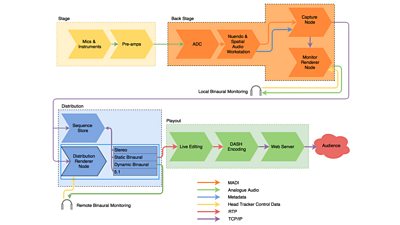

The microphone and instrument feeds from the stage were captured, pre-amplified and converted to a digital MADI signal and this was sent to a PC running Nuendo with a Spatial Audio Workstation (SAW) plug-in. This allowed us to make an object-based mix of the audio using normal gain, level and dynamics processes as well as giving each signal some positional information. These signals were then sent to the IP Studio capture node which then sent the audio & metadata grains (grain is an IP Studio term for a timestamped unit of content or data) to a rendering and monitoring node.
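As a rough illustration of what "a timestamped unit of content" can look like, the sketch below splits a buffer of PCM audio into fixed-size grains, each stamped with the time of its first sample. This is not the IP Studio data model: the `Grain` class, its field names, the `make_audio_grains` function and the default sizes (48 kHz, 960 samples per grain, 2 bytes per sample) are assumptions chosen for the example.

```python
from dataclasses import dataclass
from fractions import Fraction
from typing import List


@dataclass(frozen=True)
class Grain:
    """A timestamped unit of content (hypothetical fields, for illustration)."""
    flow_id: str          # which stream the grain belongs to
    timestamp: Fraction   # time of the first sample, in seconds
    payload: bytes        # the audio (or metadata) carried by this grain


def make_audio_grains(
    flow_id: str,
    pcm: bytes,
    start: Fraction,
    sample_rate: int = 48000,
    samples_per_grain: int = 960,
    bytes_per_sample: int = 2,
) -> List[Grain]:
    """Split a mono PCM buffer into grains with exact rational timestamps."""
    grain_bytes = samples_per_grain * bytes_per_sample
    grains = []
    for index, offset in enumerate(range(0, len(pcm), grain_bytes)):
        # Timestamps are derived from the sample count, so they never drift.
        timestamp = start + Fraction(index * samples_per_grain, sample_rate)
        grains.append(Grain(flow_id, timestamp, pcm[offset:offset + grain_bytes]))
    return grains


if __name__ == "__main__":
    silence = bytes(1920 * 2)  # 1920 samples of 16-bit silence
    for grain in make_audio_grains("stage-mix", silence, start=Fraction(10)):
        print(grain.flow_id, grain.timestamp, len(grain.payload))
```

Using exact fractions rather than floats for the timestamps is a design choice for this sketch: it keeps audio and metadata grains that describe the same instant comparable with a simple equality check.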

This monitoring renderer produced a binaural output so that the person mixing the audio could hear the head-tracked binaural output. The capture node also sent the audio & metadata grains over IP to a distribution renderer that could produce binaural, stereo and surround outputs suitable for further streaming. In a real-life setup this distribution renderer may be located remotely from the site, typically at the broadcaster itself. However, for this setup the server containing the renderer was in the same room as the capture server.

As well as streaming the audio for live output, we also recorded it. This is where the sequence store comes in; the audio & metadata grains are saved here with their timestamps to ensure correct synchronisation with the video that was also being captured at the event.
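The reason timestamps matter for the recording can be shown with a small sketch. The `SequenceStore` class below is a hypothetical in-memory stand-in, not the actual sequence store: it keeps payloads ordered by timestamp and, given the timestamp of a video frame, returns the latest audio grain that starts at or before it.

```python
import bisect
from fractions import Fraction
from typing import List, Optional, Tuple


class SequenceStore:
    """Keeps grains ordered by timestamp (hypothetical, in-memory only)."""

    def __init__(self) -> None:
        self._timestamps: List[Fraction] = []
        self._payloads: List[bytes] = []

    def write(self, timestamp: Fraction, payload: bytes) -> None:
        """Insert a grain, keeping the store sorted even if grains arrive late."""
        index = bisect.bisect_right(self._timestamps, timestamp)
        self._timestamps.insert(index, timestamp)
        self._payloads.insert(index, payload)

    def read_at(self, timestamp: Fraction) -> Optional[Tuple[Fraction, bytes]]:
        """Return the latest grain starting at or before the given time."""
        index = bisect.bisect_right(self._timestamps, timestamp)
        if index == 0:
            return None  # nothing recorded yet at this point in time
        return self._timestamps[index - 1], self._payloads[index - 1]


if __name__ == "__main__":
    store = SequenceStore()
    # Audio grains every 1/50 s, written out of order on purpose.
    store.write(Fraction(2, 50), b"audio-2")
    store.write(Fraction(0), b"audio-0")
    store.write(Fraction(1, 50), b"audio-1")

    # Find the audio that lines up with video frame 1 at 25 frames per second.
    video_frame_time = Fraction(1, 25)
    print(store.read_at(video_frame_time))
```

Because both the audio and the video carry timestamps from the same clock, lining them up later becomes a lookup like this one rather than a manual alignment job.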

Integration with other project partners' work is now becoming a vital part of the project, and this field trial allowed us to test the interface with other parts of the ICoSOLE technology. In addition to audio, we also received video from on-stage cameras (thanks to VRT for that) via SDI. This was fed into IP Studio and then sent via RTP to Tools at Work’s live mixer application, which synchronised the video with IP Studio’s audio mix using the PTP clock also provided by IP Studio. The output stream from the live mixer was then fed to an MPEG-DASH server set up by Bitmovin, which allowed the audio and video to be viewed live from the Internet via Bitmovin's website.

The end result was a synchronised stream which could be viewed and listened to in a web browser on a consumer device, all produced directly from a small field in Belgium with a modest internet connection. The audio format could be switched between binaural, stereo and a surround mix for both local monitoring and audience playout.

The other innovations the team tested at the event included iMinds' 360 degree camera placed at the front of the stage (plus several other fixed cameras) and DTO's spatial audio capture using an Eigenmike. JRS and VRT also worked on User Generated Content (UGC), using video captured from volunteers' smartphones at the festival and combining it on large displays in a booth near the stage.

The ICoSOLE project is funded by the European Commission's Seventh Framework Programme.